When it comes to SEO, you and I both know there’s no one-size-fits-all strategy. What works for one website may not move the needle for another. That’s where A/B testing comes in.

Instead of guessing which changes will improve rankings, clicks, or conversions, you can test two versions of a page or element and let the data guide your decisions.

In this post, I’ll walk you through everything you need to know about A/B testing in SEO, from the basics to the best practices that will help you avoid common mistakes.

So, without any further ado, let’s get started.

1 What is A/B Testing?

A/B testing, also known as split testing, is a method used to compare two versions of a webpage or other user experience to determine which one performs better.

By presenting version A (the control) to one group of users and version B (the variation) to another group, you can analyze how each version impacts user behaviour based on predefined metrics, such as click-through rates, conversion rates, or time spent on the page.

For instance, if you run an e-commerce website, you can test two different headlines for a product page to see which one leads to more purchases. Version A might have a headline that reads “High-Quality Shoes at Affordable Prices,” while version B reads “Exclusive Discounts on Top-Brand Shoes.”

By monitoring which headline generates more sales, you can decide which version to implement more broadly. This approach helps you to make data-driven decisions, optimizing the content and design to better meet user needs and achieve specific goals.
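If you want to see how visitors can be divided between version A and version B, here is a minimal sketch in Python. It assumes each visitor has a stable identifier (a cookie value, for example). The function name `assign_variant` and the experiment label are made up for illustration, and a dedicated A/B testing tool would normally handle this step for you.

```python
import hashlib


def assign_variant(visitor_id: str, experiment: str = "headline-test") -> str:
    """Return "A" (control) or "B" (variation) for a visitor, deterministically."""
    # Hash the experiment name together with the visitor ID so the same
    # visitor always lands in the same group for this experiment.
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Use the parity of the hash value for a roughly 50/50 split.
    return "A" if int(digest, 16) % 2 == 0 else "B"


# Hypothetical visitor IDs, used only to show the call.
for visitor in ["visitor-1", "visitor-2", "visitor-3"]:
    print(visitor, assign_variant(visitor))
```

Hashing instead of picking at random on every visit means a returning visitor keeps seeing the same headline, which keeps the two groups clean.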

2 Why Should You Run SEO A/B Tests?

You and I both want our websites to perform at their best, but guessing what works can only take us so far. That’s why running A/B tests is so important.

With A/B testing, you can compare two versions of a page, whether it’s a headline, an image, a call-to-action button, or even the overall layout, to see which one your audience responds to more. Instead of relying on assumptions, you’re making choices backed by user data.

The benefits are huge: you can boost conversions, increase click-through rates, and keep visitors more engaged. At the same time, you avoid the risk of rolling out changes that can hurt your site’s performance.

For instance, you might think a bright red button will get more clicks, while I believe a softer blue one will work better. Rather than guessing, an A/B test tells us which version actually delivers results.

A/B testing gives you confidence. It takes the guesswork out of SEO and ensures that every change you make leads to measurable improvements.

3 Examples of Elements of A/B Testing for SEO

When conducting A/B testing for SEO, selecting the right elements to test can significantly impact the effectiveness of your optimization efforts.

Here are some examples of elements you can consider testing:

- Title Tags & Meta Descriptions (Impact CTR): These play a big role in whether visitors click on your result. You can test keyword placement, title length, or add power words (like Best, Ultimate, Guide) to see which version attracts more clicks.

- Heading Structure (Improves Readability & Rankings): Headings guide both users and search engines. Try testing H1 variations with primary keywords or restructuring H2/H3s to make content easier to scan and see how it affects engagement and rankings.

- URL Structure (Shorter, Keyword-Rich URLs May Perform Better): Shorter, keyword-rich URLs are usually easier to understand. You can compare shorter URLs against longer ones, or test different keyword placements to see if it helps improve CTR and rankings.

- Internal Linking Changes (Boosts Authority for Key Pages): Changing where and how you link internally can shift authority to your most important pages. Test different linking structures to see how they affect rankings and user navigation.

- Content Updates (Longer, Optimized Content vs. Existing): Adding new sections, improving structure, or expanding content can boost performance. Run tests to compare refreshed, optimized content against the older version to measure the difference.

- Schema Markup (Increases Rich Results Visibility): Structured data like star ratings, FAQs, or event details can improve visibility in search. Testing different Schema types helps you see which ones drive more traffic and engagement.

- AI Overview Optimization (Stay Visible in AI Results): Google’s AI Overviews are reshaping search. Testing content variations, like adding concise summaries, FAQs, or clearer answers, can help you see which format increases your chances of being included. For example, you can test whether a direct answer paragraph outperforms a longer, detailed explanation in winning AI-generated visibility.

- Page Speed Optimizations (Affects Bounce Rate & Rankings): Nobody likes a slow site. By testing speed improvements like image compression, caching, or minification, you can measure their direct impact on rankings and user behaviour.

By systematically testing these elements, you can gather valuable insights into what works best for your audience and make data-driven decisions to optimize your website for both users and search engines.
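To make the Schema Markup item a little more concrete, here is a rough sketch of how an FAQ block could be generated as JSON-LD for a test variation. It uses the schema.org `FAQPage` type. The helper name `faq_jsonld` and the sample question are assumptions for illustration only, and an SEO plugin would normally output this markup for you.

```python
import json


def faq_jsonld(faqs: list[tuple[str, str]]) -> str:
    """Build a JSON-LD <script> tag for a list of (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in faqs
        ],
    }
    # Escape "</" so text containing "</script>" cannot end the tag early;
    # "\/" is a valid JSON escape for "/".
    body = json.dumps(data, indent=2).replace("</", "<\\/")
    return '<script type="application/ld+json">' + body + "</script>"


# Sample content, made up for this example.
print(faq_jsonld([("What is A/B testing?", "Comparing two versions of a page.")]))
```

You could add the output to the test pages only and leave the control pages without it, then compare how each group performs in search.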

4 How to Perform SEO A/B Tests

SEO A/B testing helps determine which website changes positively impact search rankings, traffic, and user engagement.

Unlike traditional A/B tests (where variations run side by side), SEO testing usually happens over time: you make a change, measure results, and compare them to the original version.

Here’s a step-by-step approach to conducting SEO A/B tests effectively.

4.1 Identify the Element to Test

Start by selecting an SEO element to test, such as title tags, meta descriptions, URL structure, or internal linking. Ensure the chosen element has a measurable impact on rankings, click-through rates (CTR), or user engagement.

For instance, if you suspect that your current meta description is not compelling enough, you can test a variation with a call to action (e.g., “Learn how to improve your SEO today!”).

4.2 Choose the Right Testing Method

There are two main approaches to SEO A/B testing:

- Split URL Testing: Create two separate page versions (e.g., example.com/page1 and example.com/page2) and monitor their organic performance.

- Time-Based Testing: Make a change on a page and track performance over a set period before comparing it to the prior version’s performance.

For instance, if testing page speed improvements, you can optimize images and scripts and then measure the new page’s performance after a few weeks.

4.3 Select a Sample of Pages

For reliable results, test changes on multiple pages rather than just one. This is especially useful for websites with similar pages, such as e-commerce product listings or blog categories.

For instance, an e-commerce site might test adding structured data to a subset of product pages while keeping others unchanged to compare traffic and rankings.

4.4 Implement the Changes

Apply the test variation to half of the selected pages while keeping the other half unchanged as a control group. Ensure proper tracking through Google Analytics or Google Search Console.

For instance, if testing URL structure, you could shorten URLs on half the pages and leave the others unchanged to see if shorter URLs perform better.
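If you are wondering how to pick which half gets the change, one simple option is a reproducible random split. The sketch below is only an illustration: the function name `split_pages`, the seed value, and the example URLs are all assumptions, not part of any particular tool.

```python
import random


def split_pages(urls: list[str], seed: int = 42) -> tuple[list[str], list[str]]:
    """Shuffle the URLs reproducibly and return (control, variant) halves."""
    shuffled = sorted(urls)  # sort first so the input order does not matter
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split repeatable
    midpoint = len(shuffled) // 2
    return shuffled[:midpoint], shuffled[midpoint:]


# Hypothetical product URLs, used only to show the call.
pages = [f"https://example.com/product-{n}" for n in range(1, 11)]
control, variant = split_pages(pages)
print("Control:", control)
print("Variant:", variant)
```

Splitting at random, rather than hand-picking pages, helps avoid accidentally putting all your strongest pages in one group.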

4.5 Monitor Performance Metrics

Track key SEO metrics such as:

- Organic traffic (via Google Search Console)

- Click-through rate (CTR) (from the Google Search Console Performance report)

- Bounce rate and dwell time (from Google Analytics)

- Keyword rankings (using an SEO tracking tool like Rank Math)

4.6 Analyze Results and Draw Conclusions

After collecting data for a few weeks or months, compare the performance of the test pages against the control group. If the change had a significant positive impact, consider rolling it out across more pages.
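One way to make this comparison is to measure how much each group changed from the period before the test, then subtract the control group's change from the test group's change. The sketch below assumes you have total organic clicks per group for both periods. The function names and the numbers are made up for illustration, and this is a simplified comparison rather than a full statistical analysis.

```python
def relative_change(before: float, after: float) -> float:
    """Fractional change from the pre-test period to the test period."""
    if before == 0:
        raise ValueError("the 'before' value must be non-zero")
    return (after - before) / before


def estimated_lift(
    control_before: float,
    control_after: float,
    variant_before: float,
    variant_after: float,
) -> float:
    """Change in the test group minus the change in the control group."""
    return relative_change(variant_before, variant_after) - relative_change(
        control_before, control_after
    )


# Hypothetical click totals: control grew from 1,000 to 1,100 clicks,
# while the test pages grew from 1,000 to 1,250 clicks.
lift = estimated_lift(1000, 1100, 1000, 1250)
print(f"Estimated lift from the change: {lift:.1%}")
```

Subtracting the control group's change filters out movement that affected every page, such as a seasonal bump, so what is left is closer to the effect of your change.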

4.7 Iterate and Optimize

SEO is never one-and-done. Keep testing new variations, refining your strategies, and tracking results. Just avoid testing too many elements at once, or you won’t know what caused the change.

For instance, if improving meta descriptions boosted CTR, your next test might focus on tweaking title tags for even better results.

5 Best Practices for A/B Testing

Let us now discuss the best practices that you can follow for A/B testing.

5.1 Segment Your Audience Appropriately

Not all visitors behave the same way. Demographics, devices, and even location can affect how visitors interact with your site. By segmenting your audience, you can run tests that are more accurate and useful.

For instance, if you’re testing an online store layout, run separate tests for mobile and desktop users. A navigation change that works perfectly on mobile might confuse desktop users, and vice versa. Running separate tests for each group helps you improve the experience for both.

5.2 Don’t Cloak the Test Pages

Never show one version of a page to search engines and a different one to users. This practice, called cloaking, goes against search engine rules and can lead to penalties.

When running A/B tests, make sure both the original and variation pages are visible to search engines and users alike.

For instance, if you’re testing two product page versions, don’t try to hide one from Google while showing another to users. Keep everything consistent to protect your site’s reputation and keep your test results valid.

5.3 Use rel="canonical" Links

When you run A/B tests, you often create multiple versions of the same page. Search engines can see these variations as duplicate content, which may hurt your SEO. To avoid this, you should use the rel="canonical" tag.

The canonical tag is a small piece of HTML code that you place in the <head> section of a page. It tells search engines which version of a page should be treated as the original.

For example, if you’re testing two versions of a landing page (Version A and Version B), you can set both pages to point to Version A as the canonical URL. This way, search engines know Version A is the main page, and all SEO value is credited to it.
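To show what that tag looks like, here is a small sketch that builds it in Python. The helper name `canonical_tag` and the example URLs are assumptions for illustration. In practice, your SEO plugin or theme writes this line into the page for you.

```python
from html import escape


def canonical_tag(canonical_url: str) -> str:
    """Return the <link> element that belongs in a page's <head> section."""
    # Escape the URL so characters like & or " cannot break the attribute.
    return f'<link rel="canonical" href="{escape(canonical_url, quote=True)}">'


# Both test versions point to Version A (hypothetical URL).
version_a = "https://example.com/landing-a"
for page in ("landing-a", "landing-b"):
    print(page, "->", canonical_tag(version_a))
```

Notice that both pages output the same tag, so Version B also names Version A as the original.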

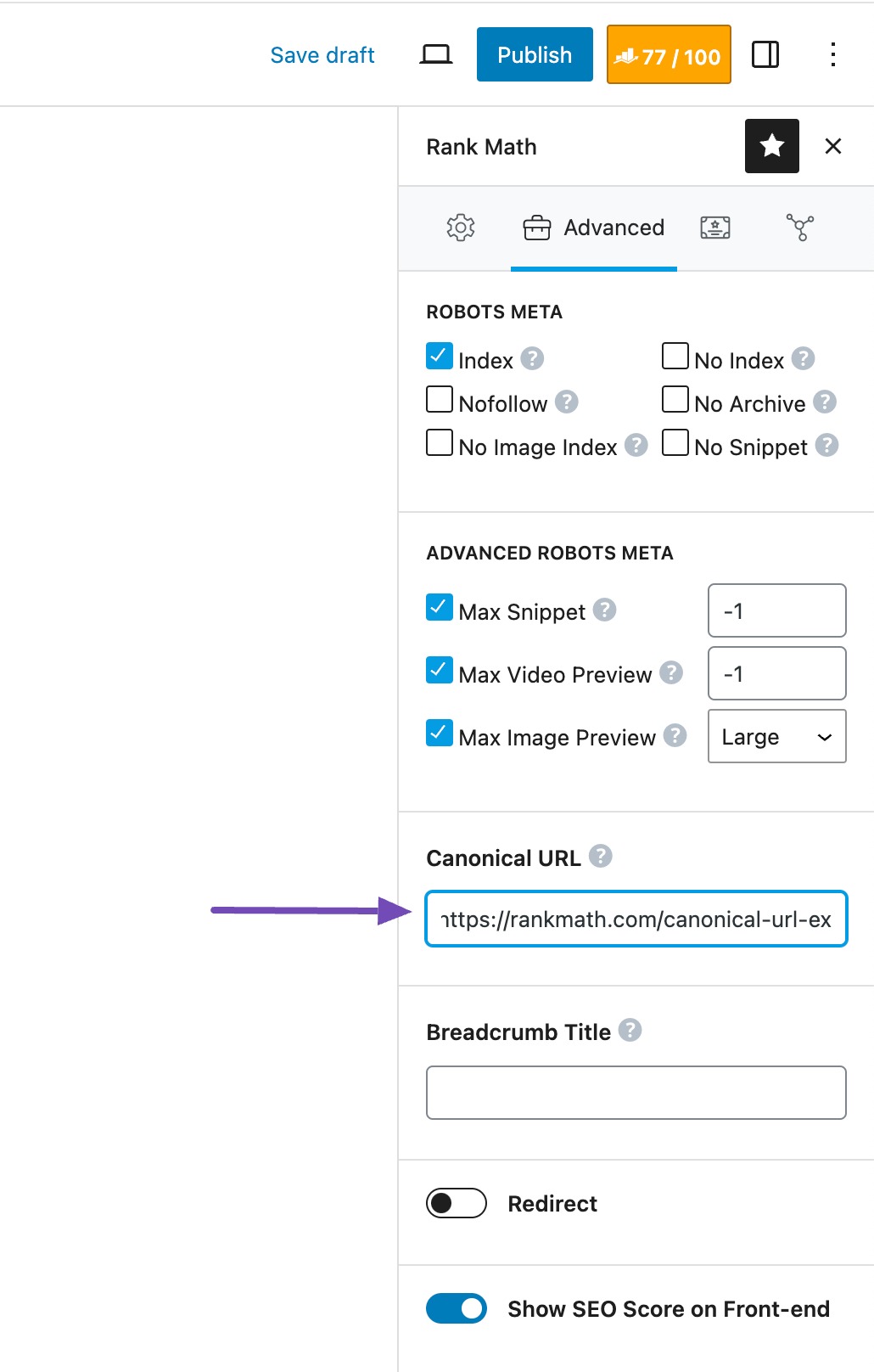

You can easily set a canonical URL in Rank Math. To do so, navigate to the Advanced tab of Rank Math in your post/page editor. If you’re unable to find the Advanced tab, please enable the advanced mode from WordPress Dashboard → Rank Math SEO → Dashboard.

Under the Advanced tab, you can change the Canonical URL field to point to the primary version of your content.

Refer to our dedicated tutorial on canonical URLs to implement and understand the canonical tags.

5.4 Use 302 Redirects, Not 301 Redirects

When running A/B tests for SEO, you should use 302 redirects instead of 301 redirects. The reason is simple: 302s are temporary, while 301s are permanent.

Let’s say you’re testing two versions of your homepage. If you set up a 301 redirect from Version A (your original page) to Version B (your test page), search engines will assume the change is permanent. This means they may pass ranking power to Version B and treat it as the main page, which could hurt your original page’s SEO.

By using a 302 redirect, you tell search engines that the change is only temporary. They’ll keep Version A in their index but still let users see Version B. This way, you can test freely without risking your site’s long-term SEO.
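At the HTTP level, the only difference between the two is the status code sent along with the `Location` header. The sketch below shows that difference. The function name `build_redirect` and the URL are assumptions for illustration, and it is not how any particular plugin implements redirects.

```python
from http import HTTPStatus


def build_redirect(destination: str, permanent: bool = False) -> tuple[int, dict[str, str]]:
    """Return the status code and headers for a redirect response."""
    # 302 (Found) signals a temporary move; 301 signals a permanent one.
    status = HTTPStatus.MOVED_PERMANENTLY if permanent else HTTPStatus.FOUND
    return int(status), {"Location": destination}


# During the test: temporary redirect to the hypothetical Version B URL.
print(build_redirect("https://example.com/home-b"))

# After the test, only if Version B wins: make the move permanent.
print(build_redirect("https://example.com/home-b", permanent=True))
```

Defaulting to the temporary redirect is a deliberate choice here, since it is the safer option while a test is still running.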

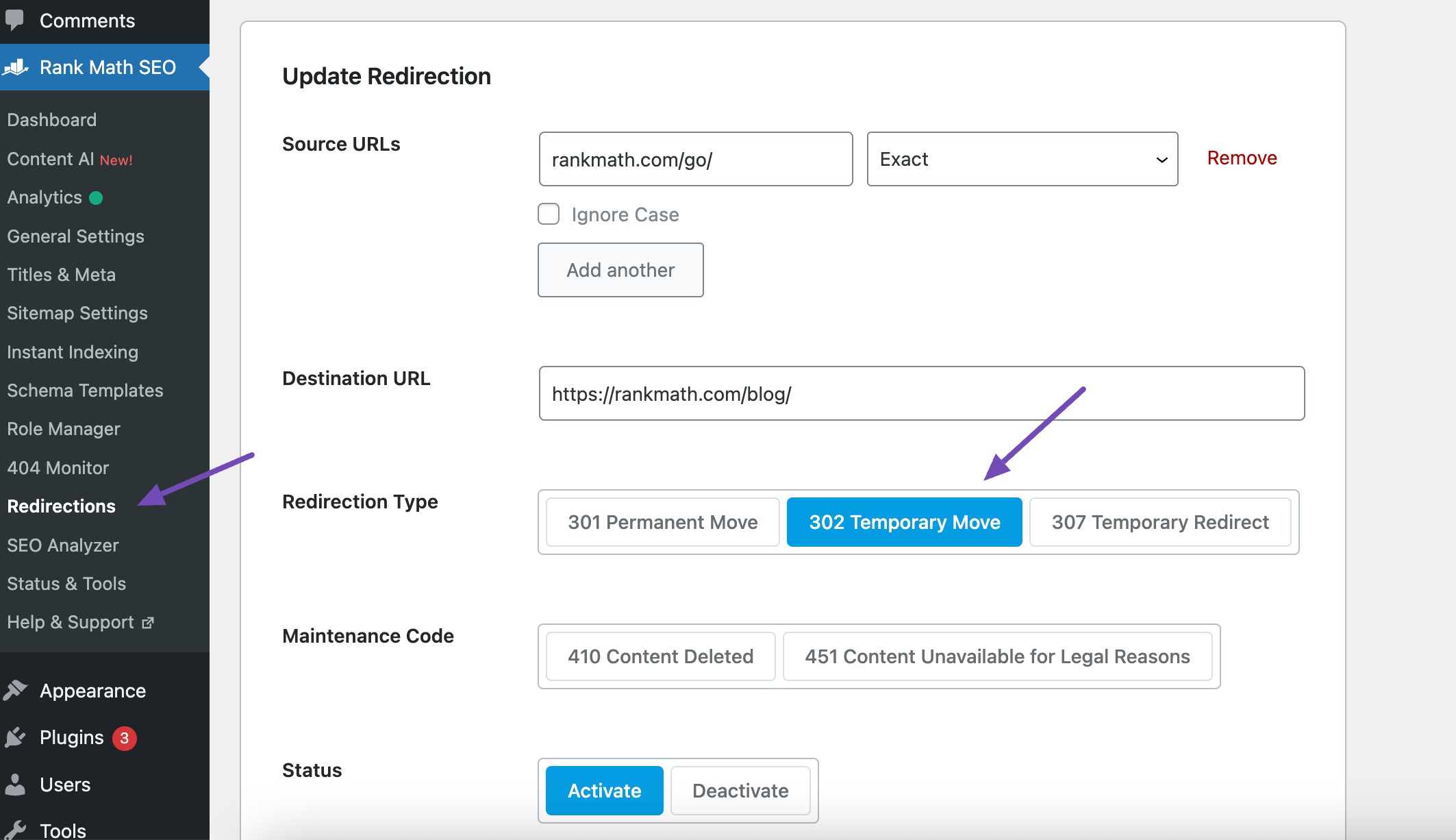

If you’re using Rank Math, setting up a 302 redirect is simple.

To do so, navigate to Rank Math SEO → Redirections module. Next, click on Add New to create a new redirection.

Add the Source URLs and the Destination URL, then select the 302 Temporary Move redirect type.

Once your test ends, you can safely decide whether to make Version B permanent (with a 301 redirect) or revert to Version A, without losing SEO value along the way.

5.5 Run the Experiment Only as Long as Necessary

When you run A/B tests for SEO, timing is just as important as the changes you make. The test should run long enough to give you reliable data, but not so long that outside factors skew the results.

For example, if you’re testing two versions of a product or landing page, you’ll want to collect enough data across different days and traffic levels. Usually, a few weeks is enough. This helps ensure that the results reflect real user behaviour.

On the other hand, leaving the test running too long can backfire. Seasonal trends, new marketing campaigns, or even search engine updates can influence the outcome, making it harder to know if your page changes actually worked.

The key is to strike a balance: run the test until you have enough data to make a confident decision, then wrap it up before outside influences creep in.

6 Common A/B Testing Mistakes

Let us now discuss the common mistakes that you can avoid during A/B testing.

6.1 Testing Too Many Variables Simultaneously

One of the biggest mistakes in A/B testing is trying to test multiple changes at the same time.

For instance, if you change both the headline (“Start Your Online Store Today” vs. “Launch Your Business with Ease”) and the call-to-action button (“Get Started” vs. “Try for Free”), and conversions go up, you won’t know which change made the difference.

This uncertainty makes it hard to learn anything useful. Instead, test one element at a time. Start with the headline, then move on to the button, and so on. That way, you’ll know exactly which change improved performance and can use that insight to guide future optimizations.

6.2 Not Giving Your Tests Enough Time to Run

One of the biggest mistakes with A/B testing is cutting it short. These tests need enough time to gather reliable data and to reflect how visitors behave across different days or situations.

If you stop too early, you may end up with results that don’t tell the full story. For instance, running a test for only three days might miss natural weekly patterns or outside influences like a holiday sale or a sudden traffic spike from a campaign.

The fix is simple: let your tests run long enough. Base the duration on how much traffic your site gets and the level of statistical confidence you need. This way, the results you rely on are more accurate and truly reflect your audience’s behaviour.
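To get a rough idea of how long that is, you can estimate how many visitors each version needs and divide by your daily traffic. The sketch below uses a common normal-approximation formula for comparing two conversion rates. The function names, the default significance level (5%) and power (80%), and the example rates are all assumptions, so treat the result as a ballpark figure.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(
    baseline_rate: float,
    expected_rate: float,
    alpha: float = 0.05,
    power: float = 0.8,
) -> int:
    """Approximate visitors needed per version to detect the given difference."""
    if baseline_rate == expected_rate:
        raise ValueError("the two rates must differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance level
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    variance = baseline_rate * (1 - baseline_rate) + expected_rate * (1 - expected_rate)
    effect = expected_rate - baseline_rate
    return math.ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


def days_needed(sample_per_variant: int, daily_visitors_per_variant: int) -> int:
    """Days required to collect the sample at the given daily traffic."""
    return math.ceil(sample_per_variant / daily_visitors_per_variant)


# Hypothetical scenario: 3% baseline conversion rate, hoping to reach 3.6%,
# with 500 visitors per version per day.
needed = sample_size_per_variant(0.03, 0.036)
print(needed, "visitors per version,", days_needed(needed, 500), "days")
```

The smaller the difference you want to detect, the more visitors you need, which is why low-traffic sites usually have to run tests for longer.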

6.3 Neglecting Seasonality and External Factors

When you run A/B tests, it’s easy to overlook how seasonality and outside influences can affect the results. Seasonality includes predictable changes in user behaviour, like holiday shopping, back-to-school sales, or summer vacations.

External factors are less predictable, such as major news events, economic shifts, or new competitor campaigns.

If you don’t account for these, you might think your test changes caused a spike or drop in performance when, in reality, outside influences were responsible. For instance, if you test a new checkout layout on your store during the holiday season, higher conversions may simply reflect seasonal shopping habits, not the effectiveness of your test.

To avoid this, plan your tests with seasonal trends in mind and watch for outside events that can skew results. If something unexpected happens, like a sudden news event or market change, pause your test or extend it until things stabilize so you can gather more accurate data.

6.4 Focusing Solely on Positive Outcomes

If you only look at tests that show good results, you’re missing half the picture. Negative or neutral outcomes are just as valuable because they show you what doesn’t work and save you from repeating the same mistakes.

Ignoring these results can also give you a false sense of progress, making you overly confident in your testing approach.

To truly understand your audience, you need to review every outcome, not just the wins. This way, you’ll build a clearer view of what drives real results.

6.5 Misinterpreting Results

One of the common mistakes you can make in A/B testing is misinterpreting the results. This usually happens when you don’t fully understand statistical significance or ignore outside factors that can influence the outcome.

If you act on misread data, you can make changes that hurt your site’s performance instead of improving it. To avoid this, make sure your tests run long enough to reach statistical significance and always double-check the context before drawing conclusions.
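If you are not sure what checking statistical significance looks like in practice, here is a minimal sketch of a two-proportion z-test, one common way to compare two conversion rates. The function name and the sample numbers are assumptions for illustration, and most A/B testing tools run a check like this for you.

```python
import math
from statistics import NormalDist


def two_proportion_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value for the difference between two conversion rates."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the assumption that both versions perform the same.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_error = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_error == 0:
        return 1.0  # no variation at all, so there is no evidence of a difference
    z_score = (rate_b - rate_a) / std_error
    return 2 * (1 - NormalDist().cdf(abs(z_score)))


# Hypothetical results: 100 of 1,000 visitors converted on version A,
# and 105 of 1,000 converted on version B.
p_value = two_proportion_p_value(100, 1000, 105, 1000)
print(f"p-value: {p_value:.3f}")
print("Significant at 5%" if p_value < 0.05 else "Not significant yet")
```

A p-value below your chosen threshold (0.05 is a common convention) suggests the difference is unlikely to be random noise. A small gap on a small sample, like the one in this example, usually does not clear that bar.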

6.6 Not Accounting for User Experience

Another common pitfall is focusing only on numbers like conversion rates or click-throughs while overlooking the bigger picture: how your users actually feel.

For example, a test might show that adding an aggressive pop-up boosts email sign-ups. But if that pop-up annoys visitors, it can increase bounce rates, shorten time on site, and push visitors away from your brand in the long run.

To prevent this, balance the data with user feedback. Run surveys, collect usability insights, and keep an eye on engagement patterns. By combining performance metrics with user experience, you’ll make improvements that not only boost short-term results but also keep visitors coming back.

6.7 Overlooking Audience Segmentation

If you run tests on your entire audience without breaking them into smaller groups, the results can be misleading. What works for one group of users may not work for another. For instance, a younger audience might prefer a bold design, while older users may find it distracting.

When you ignore segmentation, you risk making changes that don’t actually improve the experience for everyone. Instead, you should identify key audience groups, such as new visitors vs. returning customers, and analyze how each group responds. This way, your test results will be more accurate, and your changes will be more effective for the right audience.
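As a rough illustration, the sketch below breaks test results down by audience group and version. It assumes you can export one record per visit with a segment label, the version shown, and whether the visitor converted. The function name and the sample records are made up for this example.

```python
from collections import defaultdict


def conversion_by_segment(visits: list[tuple[str, str, bool]]) -> dict[tuple[str, str], float]:
    """Return the conversion rate for each (segment, version) pair."""
    # Maps (segment, version) to [conversions, total visits].
    totals: dict[tuple[str, str], list[int]] = defaultdict(lambda: [0, 0])
    for segment, version, converted in visits:
        totals[(segment, version)][0] += int(converted)
        totals[(segment, version)][1] += 1
    return {key: conversions / count for key, (conversions, count) in totals.items()}


# Hypothetical visit records: (segment, version, converted).
visits = [
    ("new", "A", False), ("new", "A", True),
    ("new", "B", True), ("new", "B", True),
    ("returning", "A", True), ("returning", "A", True),
    ("returning", "B", False), ("returning", "B", True),
]
for (segment, version), rate in sorted(conversion_by_segment(visits).items()):
    print(f"{segment:<10} version {version}: {rate:.0%}")
```

Looking at the rates side by side makes it easier to spot a variation that helps one group while hurting another. Keep in mind that each segment needs enough visits of its own before you trust its numbers.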

6.8 Inconsistent Implementation

Another common mistake is rolling out test variations inconsistently. If not all users see the variation under the same conditions, your results won’t be reliable.

For instance, imagine you’re testing a new homepage layout. If some visitors see the new design while others still see the old one because of a technical glitch, your data won’t reflect the impact of the change. Similarly, if you’re testing two different call-to-action buttons but one page loads faster than the other, the results may be influenced by the speed, not the button itself.

To avoid this, make sure you use a reliable A/B testing tool that delivers variations consistently. Before going live, double-check everything with thorough quality assurance to ensure only the intended variable is being tested.

6.9 Not Considering Core Web Vitals in Testing

Core Web Vitals measure loading performance (Largest Contentful Paint), interactivity (Interaction to Next Paint), and visual stability (Cumulative Layout Shift), and they play a big role in user experience. If you ignore them in your A/B testing, your results may give you the wrong picture.

For instance, a variation with a better design might win in clicks but lose users if it loads slower or shifts elements around the page. Visitors may abandon the page before they even see your test changes.

To prevent this, always monitor Core Web Vitals alongside your tests. Make sure your variations don’t negatively impact speed or usability. This will give you a more realistic view of how your changes affect users.
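One lightweight way to do this is to check each variation's measurements against Google's published “good” thresholds: Largest Contentful Paint of 2.5 seconds or less, Interaction to Next Paint of 200 milliseconds or less, and Cumulative Layout Shift of 0.1 or less. The sketch below assumes you already have those measurements from a tool of your choice. The dictionary keys, the function name, and the sample values are made up for illustration.

```python
# "Good" thresholds for the three Core Web Vitals.
GOOD_THRESHOLDS = {"lcp_seconds": 2.5, "inp_ms": 200, "cls": 0.1}


def failing_vitals(metrics: dict[str, float]) -> list[str]:
    """Return the names of metrics that exceed the "good" threshold."""
    # A metric missing from the input counts as failing, so gaps get noticed.
    return [
        name
        for name, limit in GOOD_THRESHOLDS.items()
        if metrics.get(name, float("inf")) > limit
    ]


# Hypothetical measurements for the two versions of a page.
version_a = {"lcp_seconds": 2.1, "inp_ms": 150, "cls": 0.05}
version_b = {"lcp_seconds": 3.2, "inp_ms": 150, "cls": 0.25}
print("Version A fails:", failing_vitals(version_a))
print("Version B fails:", failing_vitals(version_b))
```

If a variation fails a check that the control passes, fix the performance issue first. Otherwise, you may end up measuring the slowdown rather than the change you meant to test.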

7 Conclusion

A/B testing in SEO isn’t just about running experiments; it’s about making smarter decisions that move you closer to your goals. When you take the time to test carefully, avoid common mistakes, and apply best practices, you give yourself the chance to truly understand what works for your audience.

Remember, the goal isn’t to change everything at once but to make small, meaningful improvements that add up over time. By approaching A/B testing with patience and attention to detail, you’ll not only improve your rankings and traffic but also create a better experience for your visitors.

Now it’s your turn. Start with one test, keep learning from the results, and use those insights to shape your SEO strategy step by step. The more consistent you are, the more powerful your testing becomes.

If you like this post, let us know by tweeting @rankmathseo.