If you’ve ever seen the “Blocked by robots.txt” error in Google Search Console or in the Index Status report of Rank Math’s Analytics, you know it can be pretty frustrating. After all, you’ve followed all the rules and made sure your website was optimized for search engines like Google or Bing. So why is this happening?

In this knowledgebase article, we’ll show you how to fix the “Blocked by robots.txt” error, as well as explain what this error means and how to prevent it from happening again in the future.

Let’s get started!

1 What Does the Error Mean?

The “Blocked by robots.txt” error means that your website’s robots.txt file is blocking Googlebot from crawling the page. In other words, Google is trying to access the page but is being prevented by the robots.txt file.

This can happen for a number of reasons, but the most common one is that the robots.txt file is not configured correctly. For example, the file may contain a Disallow directive that accidentally matches the page, or a rule that blocks Googlebot from the whole site.
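To make this concrete, here is a minimal Python sketch using the standard library’s `urllib.robotparser`. The robots.txt content and the `example.com` URLs are hypothetical. Python’s parser only approximates Googlebot (it applies the first matching rule and does not understand `*` or `$` wildcards in paths), so treat this as an illustration rather than a replacement for Google’s own tools.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: the /blog/ rule stands in for an accidental block.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /blog/
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if user_agent may crawl url under the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# The blog post matches "Disallow: /blog/", so it is blocked for every crawler.
print(is_allowed(ROBOTS_TXT, "Googlebot", "https://example.com/blog/my-post/"))
# No rule matches /about/, so crawling is allowed.
print(is_allowed(ROBOTS_TXT, "Googlebot", "https://example.com/about/"))
```

A page in the first situation is the kind that would be reported as “Blocked by robots.txt”.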

2 How to Find the “Blocked by robots.txt” Error

Luckily, the “Blocked by robots.txt” error is pretty easy to find. You can use either Google Search Console or the Index Status report in Rank Math’s Analytics to find it.

2.1 Use Google Search Console to Find the Error

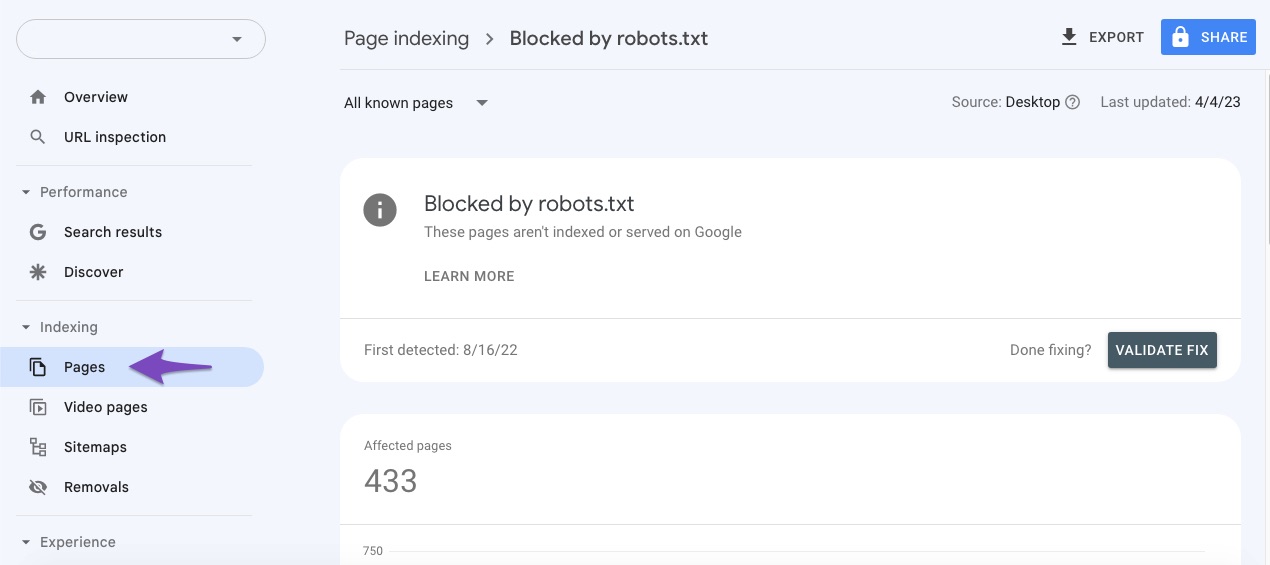

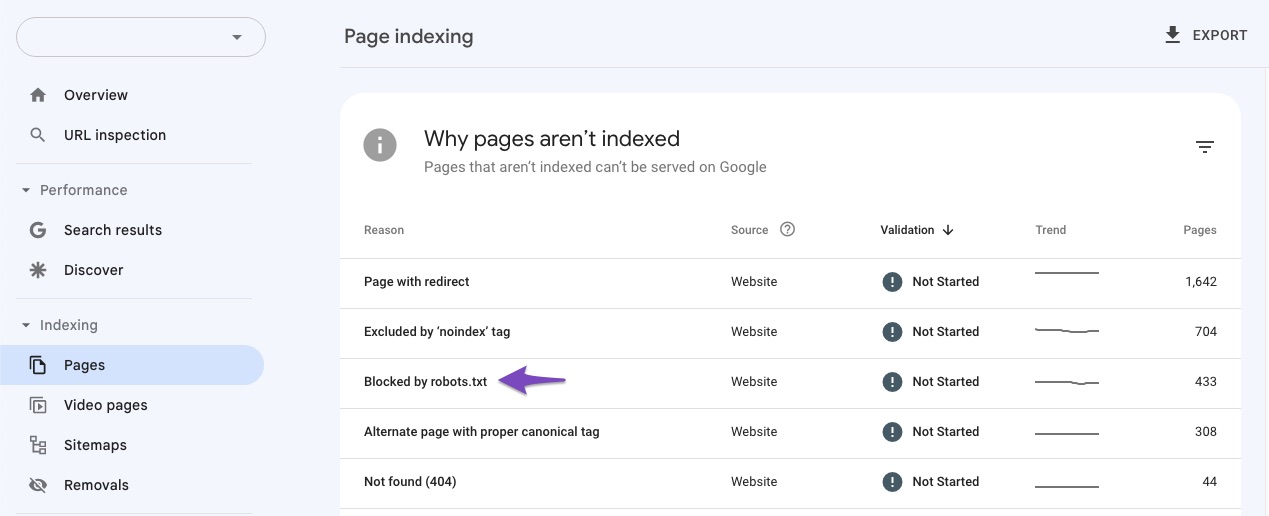

To check if you have this error in Google Search Console, go to the Pages report and click on the Not indexed section.

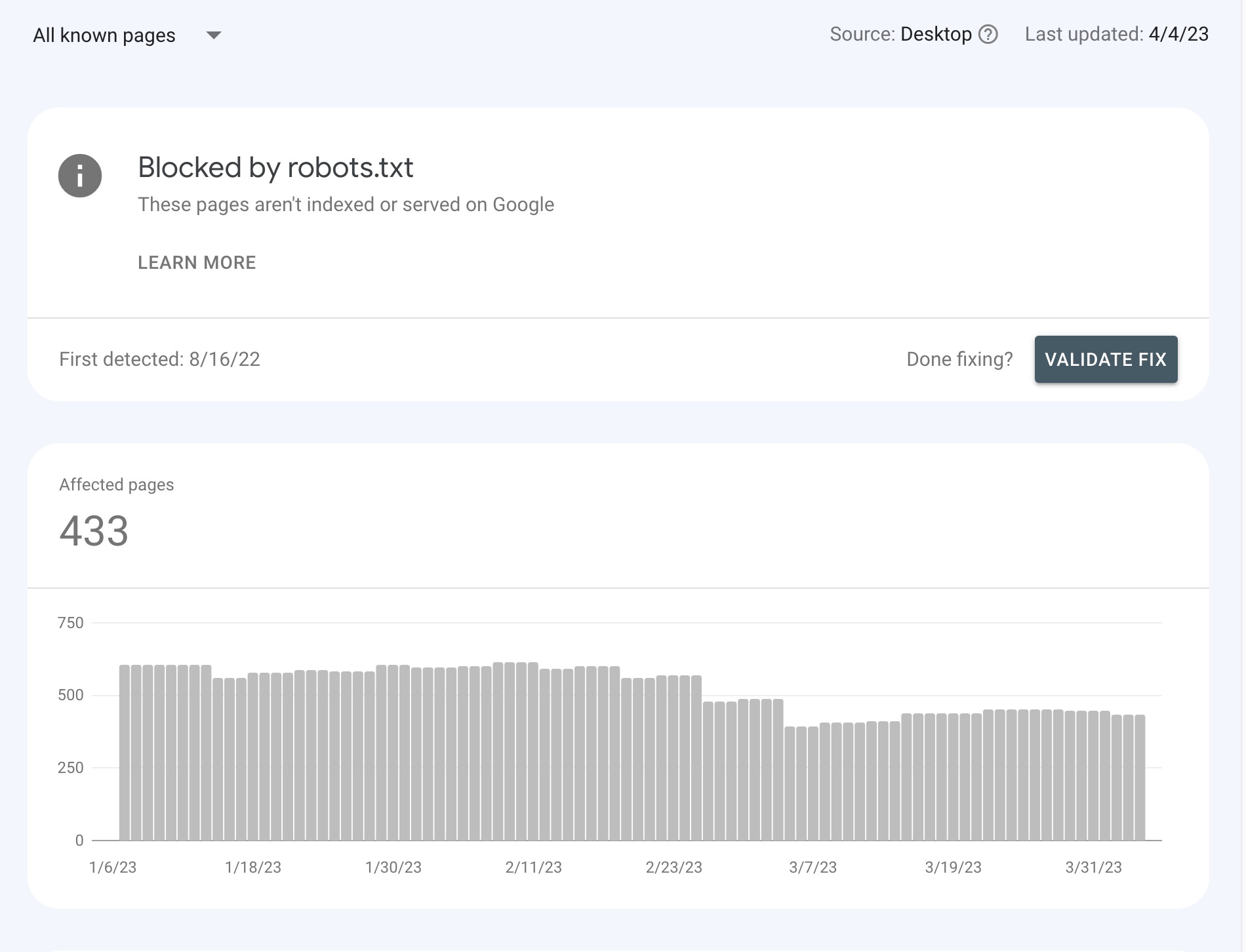

Then, click on the Blocked by robots.txt error as shown below:

If you click on the error, you’ll see a list of the pages that are being blocked by your robots.txt file.

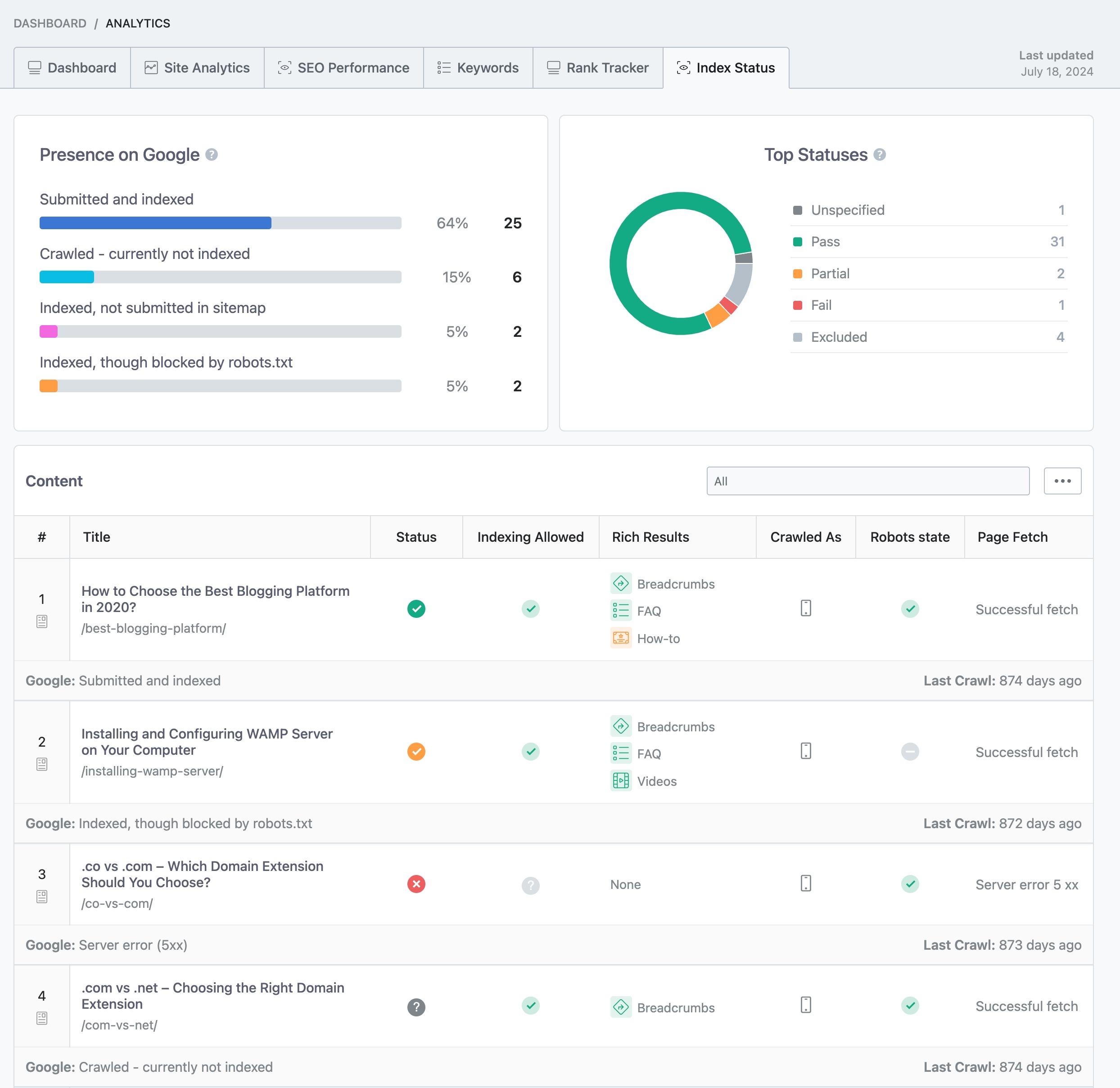

2.2 Use Rank Math’s Analytics to Identify Pages with the Problem (PRO)

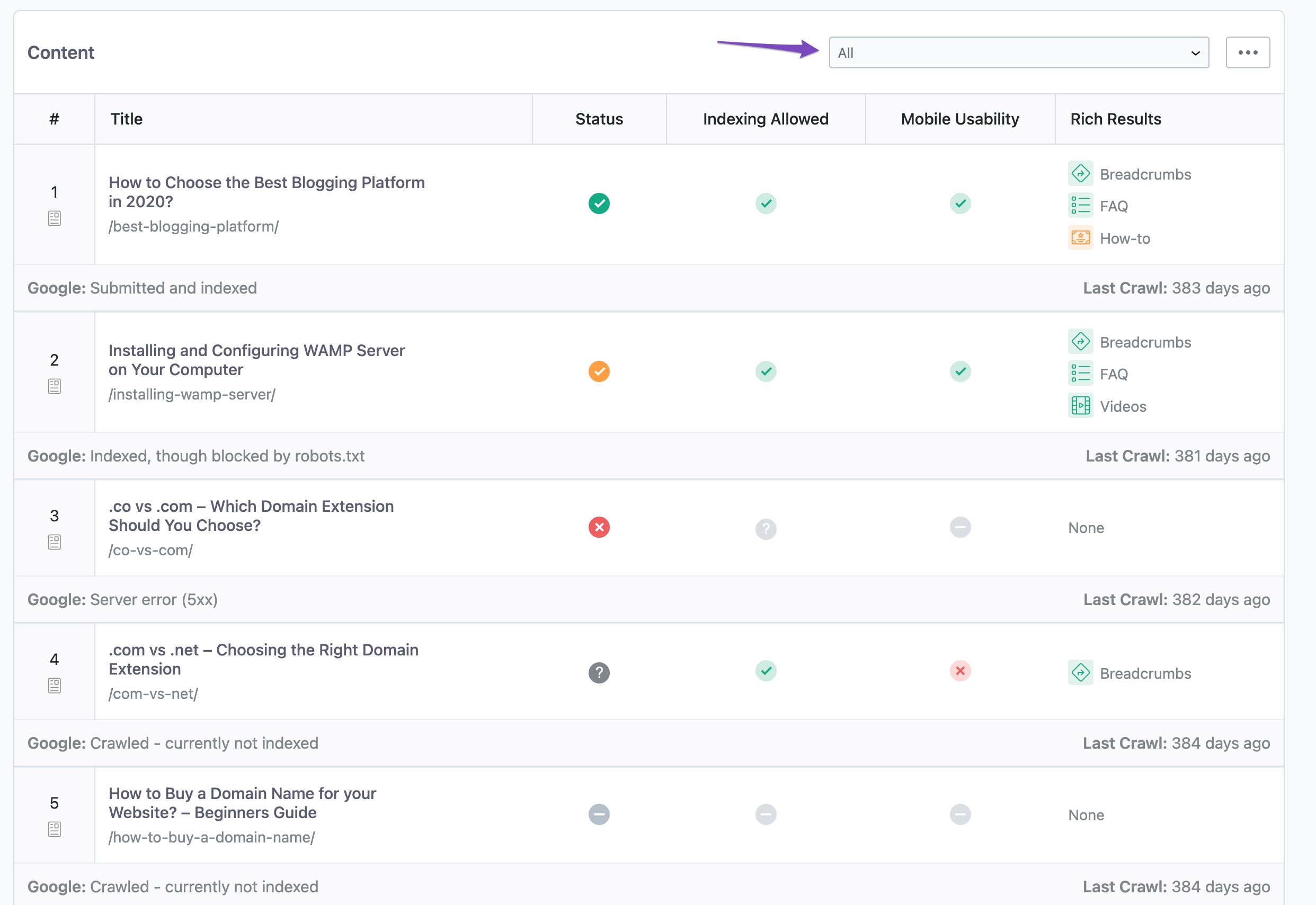

You can also use the Index Status report in Rank Math’s Analytics to identify the pages with the problem.

To do so, navigate to Rank Math SEO → Analytics in the WordPress dashboard. Next, click on the Index Status tab. Under this tab, you’ll see the actual index status of your pages as well as their presence on Google.

Moreover, if you’re using Rank Math PRO, you can filter posts by index status using the drop-down menu. When you select a specific status, say “Blocked by robots.txt,” you’ll see all posts that share that index status.

Once you have the list of pages that are returning this status, you can start to troubleshoot and fix the issue.
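When the list is long, grouping the URLs by their top-level path can hint at which rule is responsible. The following is a rough sketch, assuming you have copied the blocked URLs into a Python list; the URLs and the helper name `count_by_top_level_path` are made up for illustration.

```python
from collections import Counter
from urllib.parse import urlparse

# Hypothetical URLs copied from the "Blocked by robots.txt" list.
BLOCKED_URLS = [
    "https://example.com/tag/seo/",
    "https://example.com/tag/news/",
    "https://example.com/cart/",
]


def count_by_top_level_path(urls):
    """Count URLs per first path segment, e.g. /tag/ or /cart/."""
    counts = Counter()
    for url in urls:
        segments = [part for part in urlparse(url).path.split("/") if part]
        prefix = f"/{segments[0]}/" if segments else "/"
        counts[prefix] += 1
    return counts


counts = count_by_top_level_path(BLOCKED_URLS)
for prefix, total in counts.most_common():
    print(prefix, total)
```

A prefix that accounts for most of the blocked URLs is a good first candidate to look for among the Disallow rules in your robots.txt.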

3 How to Fix the “Blocked by robots.txt” Error

To fix this error, you need to ensure that your website’s robots.txt file is configured correctly. You can use the robots.txt testing tool to check your file and ensure that no directives are blocking Googlebot from accessing your site.

If you find directives in your robots.txt file that are blocking Googlebot from accessing your site, you will need to remove them or replace them with more permissive ones.

Let’s see how you can test your robots.txt file and make sure that there are no directives blocking Googlebot from accessing your site.

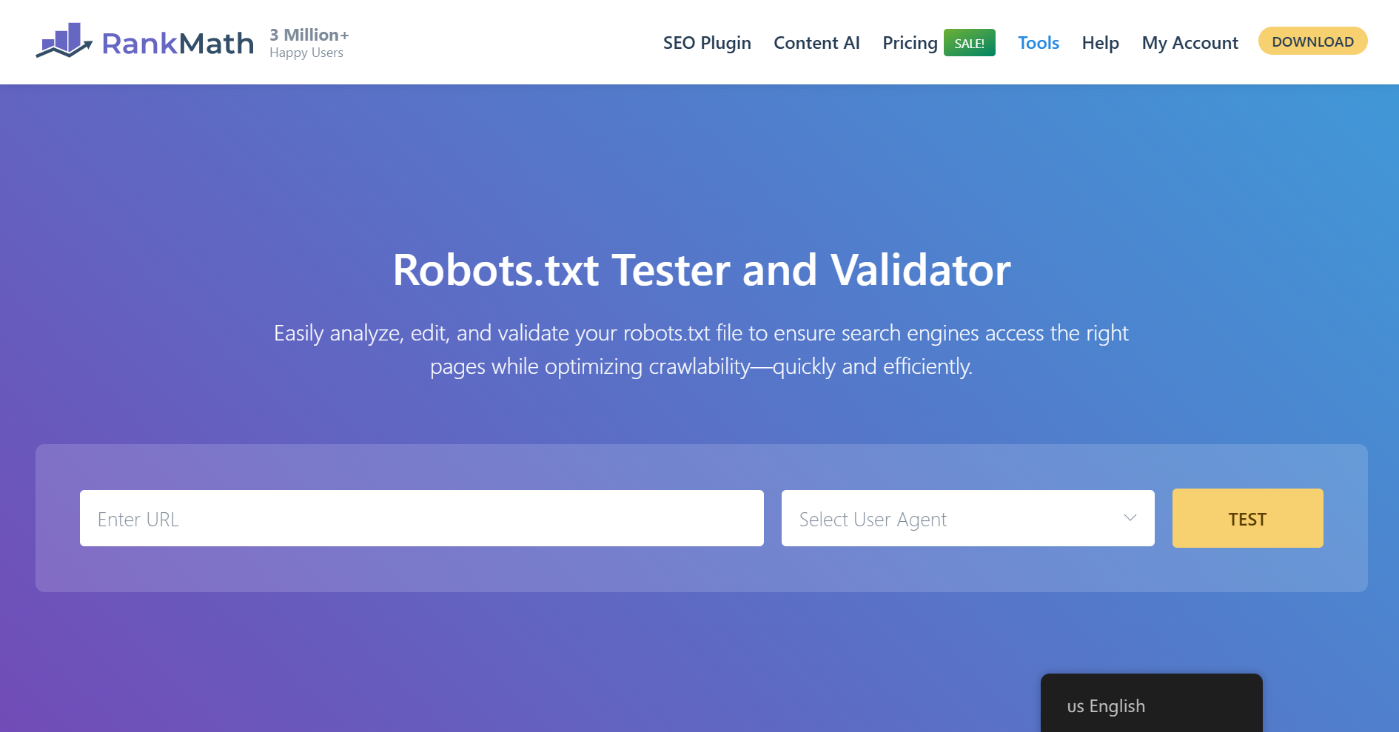

3.1 Open robots.txt Tester

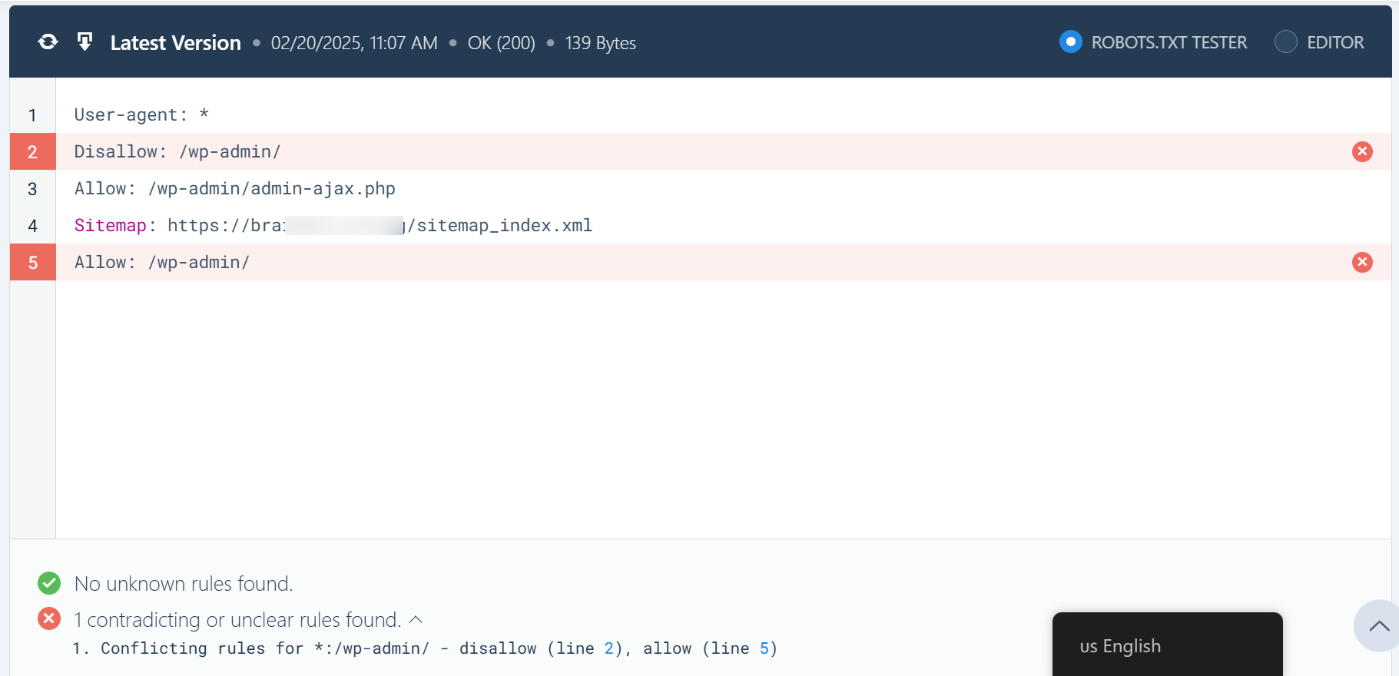

First, head over to the robots.txt testing tool. Here is what it looks like.

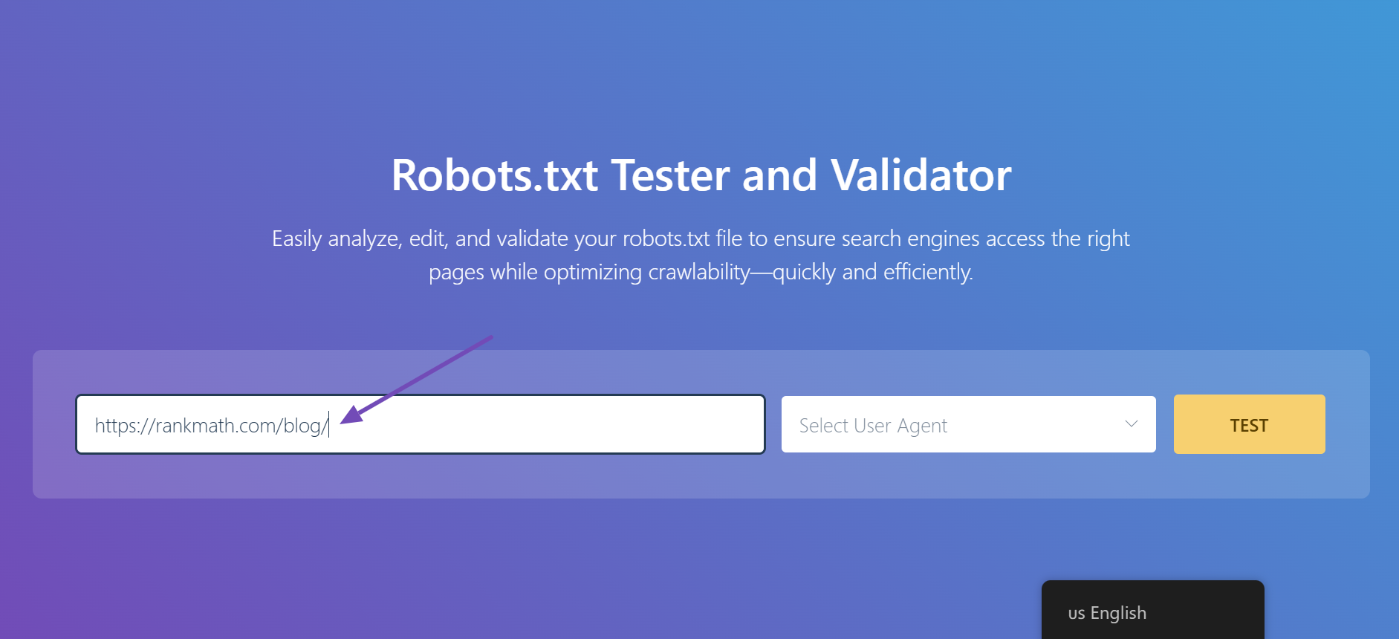

3.2 Enter the URL of Your Site

Here, you will find the option to enter a URL from your website for testing.

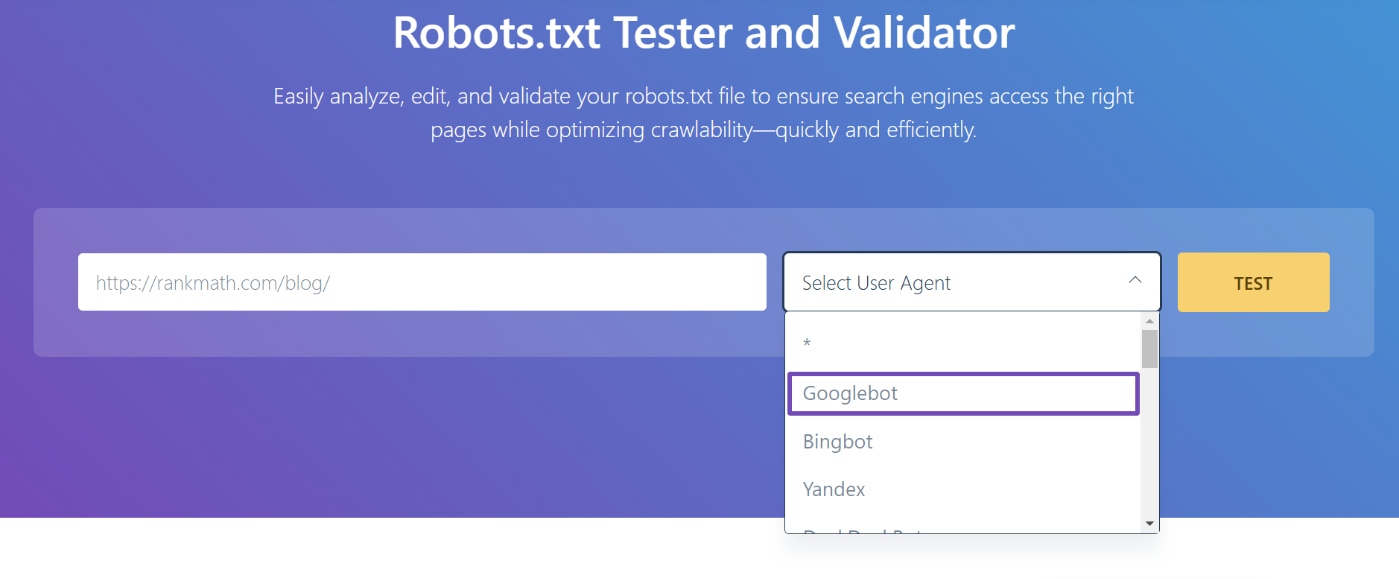

3.3 Select the User-Agent

Next, click the dropdown arrow and select the user agent you want to simulate (Googlebot, in our case).
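The user agent matters because robots.txt rules are grouped per crawler, so the same URL can be open to one bot and closed to another. Here is a small sketch with Python’s `urllib.robotparser`; the robots.txt content, the URL, and the helper name `access_by_agent` are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt with a Googlebot-specific group and a catch-all group.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /private/

User-agent: *
Disallow:
"""


def access_by_agent(robots_txt, url, user_agents):
    """Map each user agent to True (allowed) or False (blocked) for url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in user_agents}


result = access_by_agent(
    ROBOTS_TXT,
    "https://example.com/private/report/",
    ["Googlebot", "Bingbot"],
)
print(result)
```

In this sketch Googlebot is blocked by its own group, while Bingbot falls back to the `*` group, where an empty Disallow allows everything.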

3.4 Validate Robots.txt

Finally, click the TEST button.

The tool will instantly check whether the selected user agent can access the URL under the current robots.txt configuration and report the result.

The code editor available at the center of the screen will also highlight the rule in your robots.txt that is blocking access, as shown below.

3.5 Edit & Debug

If the robots.txt Tester finds any rule preventing access, you can try editing the rule right inside the code editor and then run through the test once again.
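The same edit-and-retest loop can be sketched offline. The example below compares a hypothetical “before” and “after” robots.txt and lists the URLs that the edit unblocks for Googlebot. The file contents, URLs, and helper names are assumptions. The Allow line is placed before the Disallow line because Python’s parser uses the first matching rule, whereas Google uses the most specific one; with this ordering both agree.

```python
from urllib.robotparser import RobotFileParser

BEFORE = """\
User-agent: *
Disallow: /
"""

# A more permissive replacement: only the admin area stays blocked.
AFTER = """\
User-agent: *
Allow: /wp-admin/admin-ajax.php
Disallow: /wp-admin/
"""

URLS = [
    "https://example.com/blog/my-post/",
    "https://example.com/wp-admin/options.php",
    "https://example.com/wp-admin/admin-ajax.php",
]


def build_parser(robots_txt):
    """Parse robots.txt text into a RobotFileParser."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def newly_unblocked(before, after, urls, user_agent="Googlebot"):
    """Return URLs blocked under `before` but crawlable under `after`."""
    old, new = build_parser(before), build_parser(after)
    return [
        url for url in urls
        if not old.can_fetch(user_agent, url) and new.can_fetch(user_agent, url)
    ]


unblocked = newly_unblocked(BEFORE, AFTER, URLS)
print(unblocked)
```

If a page you care about is missing from the result, the edited rules still block it and need another pass.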

You can also refer to our dedicated knowledgebase article on robots.txt to learn more about the accepted rules, which would be helpful in editing the rules here.

Once the test passes, keep in mind that the rule is fixed in the tester only. This is a debugging tool, and any changes you make here will not be reflected in your website’s robots.txt unless you copy and paste the contents into your website’s robots.txt.

3.6 Edit Your Robots.txt With Rank Math

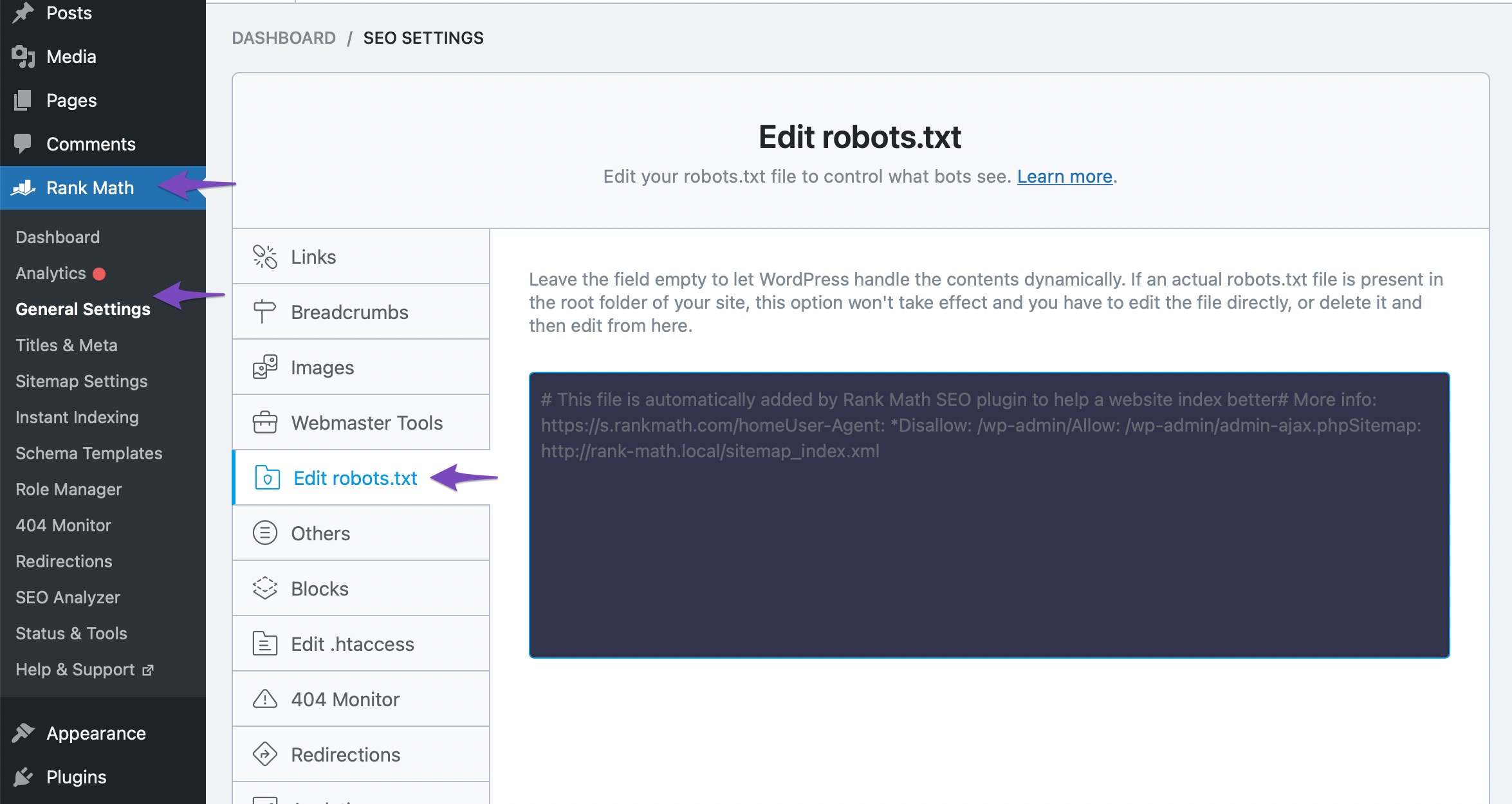

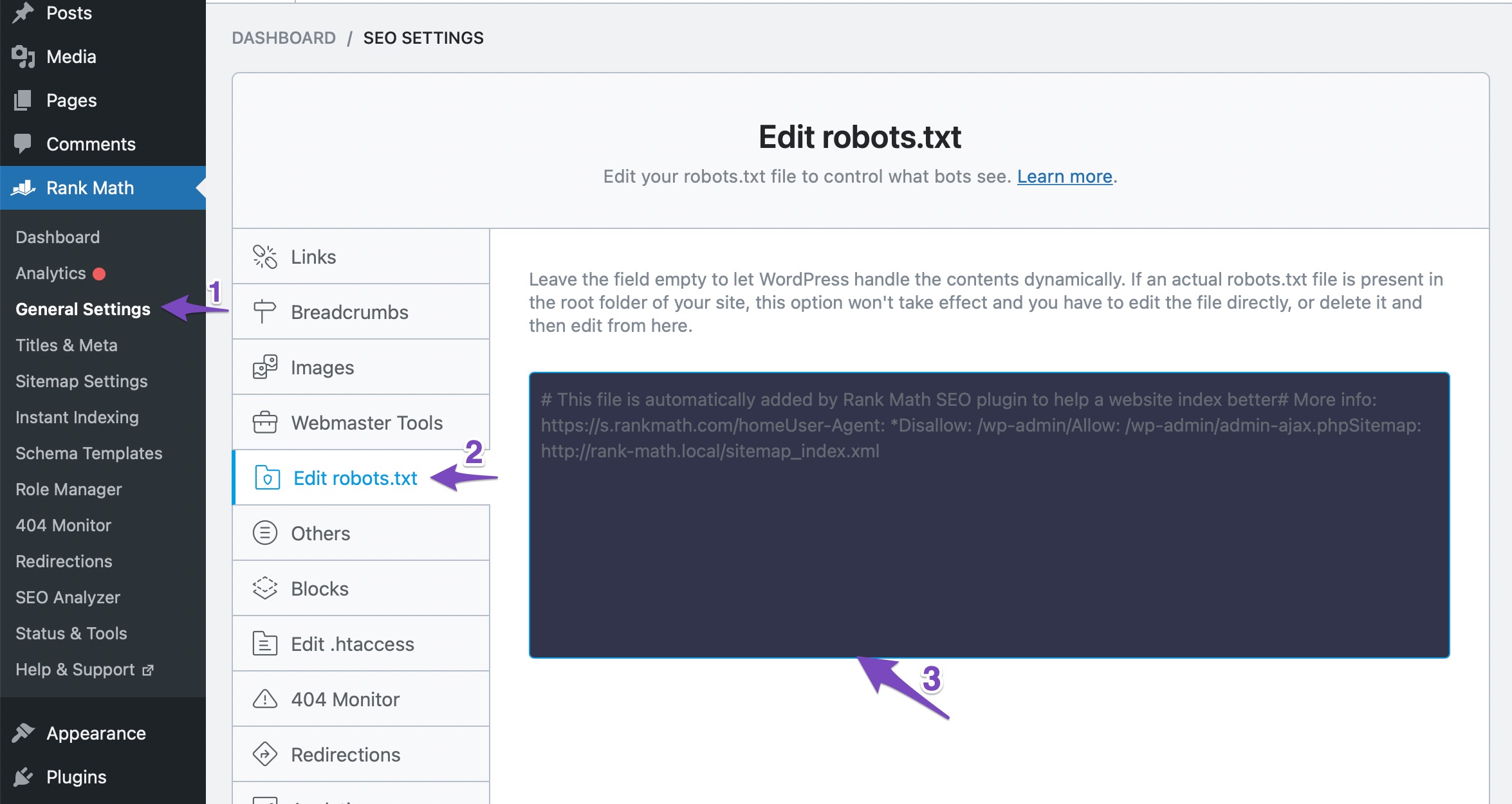

To apply the changes to your site, open the robots.txt editor in Rank Math, which is located under WordPress Dashboard → Rank Math SEO → General Settings → Edit robots.txt as shown below:

Note: If this option isn’t available for you, then ensure you’re using the Advanced Mode in Rank Math.

In the code editor in the middle of your screen, paste the code you’ve copied from the robots.txt Tester and then click the Save Changes button to apply the changes.

Caution: Please be careful while making any major or minor changes to your website via robots.txt. While these changes can improve your search traffic, they can also do more harm than good if you are not careful.

For reference, see the screenshots below:

That’s it! Once you’ve made these changes, Google will be able to access your website, and the “Blocked by robots.txt” error will clear after Google recrawls the affected pages.

4 How to Prevent the Error From Happening Again

To prevent the “Blocked by robots.txt” error from happening again in the future, we recommend reviewing your website’s robots.txt file on a regular basis. This will help to ensure that all directives are accurate and that no pages are accidentally blocked from being crawled by Googlebot.
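A periodic review like this can be partly automated. The sketch below, again using Python’s `urllib.robotparser`, checks a list of must-crawl paths against a parsed robots.txt. The paths, the demo rules, and the helper names are hypothetical, and `load_live_parser` needs network access, so the demo at the bottom uses an in-memory file instead.

```python
from urllib.robotparser import RobotFileParser


def load_live_parser(site_url):
    """Fetch and parse <site_url>/robots.txt (requires network access)."""
    parser = RobotFileParser(site_url.rstrip("/") + "/robots.txt")
    parser.read()
    return parser


def blocked_paths(parser, paths, user_agent="Googlebot"):
    """Return the paths that user_agent is not allowed to crawl."""
    return [path for path in paths if not parser.can_fetch(user_agent, path)]


# Offline demo with hypothetical rules and paths.
demo = RobotFileParser()
demo.parse(["User-agent: *", "Disallow: /shop/"])
problems = blocked_paths(demo, ["/", "/blog/", "/shop/sale/"])
print(problems)
```

For a real check, you could call `blocked_paths(load_live_parser("https://example.com"), [...])` on a schedule with your own domain and key pages, and investigate whenever the returned list is not empty.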

We also recommend using a tool like Google Search Console to help you manage your website’s robots.txt file. It will allow you to monitor your robots.txt file status and issues easily, as well as submit pages for indexing, view crawl errors, and more.

5 Conclusion

We hope this article helped you learn how to fix the “Blocked by robots.txt” error in Google Search Console and in the Index Status report of Rank Math’s Analytics. If you have any doubts or questions related to this matter, please don’t hesitate to reach out to our support team. We are available 24×7, 365 days a year, and are happy to help you with any issues that you might face.