Your robots.txt file is the first stop for search engine crawlers visiting your site. When the file has an issue, it can cause serious technical SEO problems that hurt your rankings, or worse. So it is a good idea to fix any robots.txt issue immediately.

Note that Rank Math creates a virtual robots.txt file that you can manage from the WordPress dashboard. This means you do not need a physical robots.txt file in your website’s root folder.

In this knowledge base article, we will show you some common robots.txt issues you may encounter while using Rank Math and how to fix them.


1 The robots.txt File is Not Writable

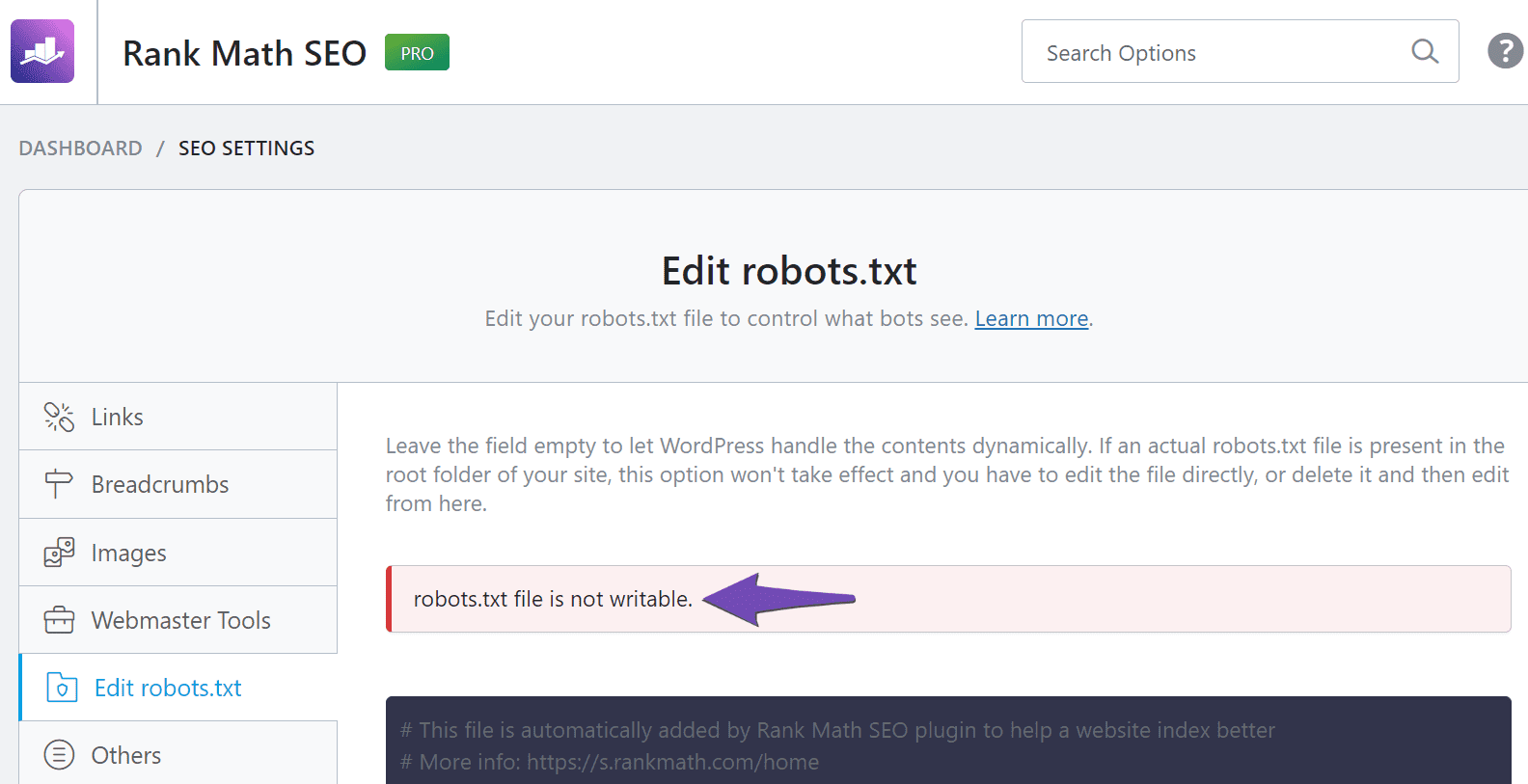

In the Edit robots.txt settings, you may encounter a “robots.txt file is not writable” error message, as shown below.

This error is displayed when Rank Math cannot modify your robots.txt file. To solve it, add the code below to your site; it allows Rank Math to edit your robots.txt file. You can refer to this guide to learn how to add filters to your site.

```php
/**
 * Allow editing the robots.txt & htaccess data.
 *
 * @param bool Can edit the robots & htaccess data.
 */
add_filter( 'rank_math/can_edit_file', '__return_true' );
```

Alternatively, open your wp-config.php file and search for the code below.

Note: You will find the wp-config.php file in the root folder of your WordPress installation. You can access your root folder with an FTP application or directly in cPanel. If you are unable to access your wp-config.php file, contact your host for support.

```php
define( 'DISALLOW_FILE_EDIT', true );
```

If the code exists, replace it with the code below. If it does not exist, add the code below to your wp-config.php file, above the line that reads “That’s all, stop editing!”.

```php
define( 'DISALLOW_FILE_EDIT', false );
```

2 The robots.txt URL Returns a 404 Error or a Blank Page

Rank Math automatically generates a robots.txt file at https://yourdomain.com/robots.txt.

When the URL returns a blank page or a 404 error, it may indicate that a second robots.txt file exists in your site’s root directory. This physical file conflicts with Rank Math’s virtual robots.txt file, causing the robots.txt URL to return an error.

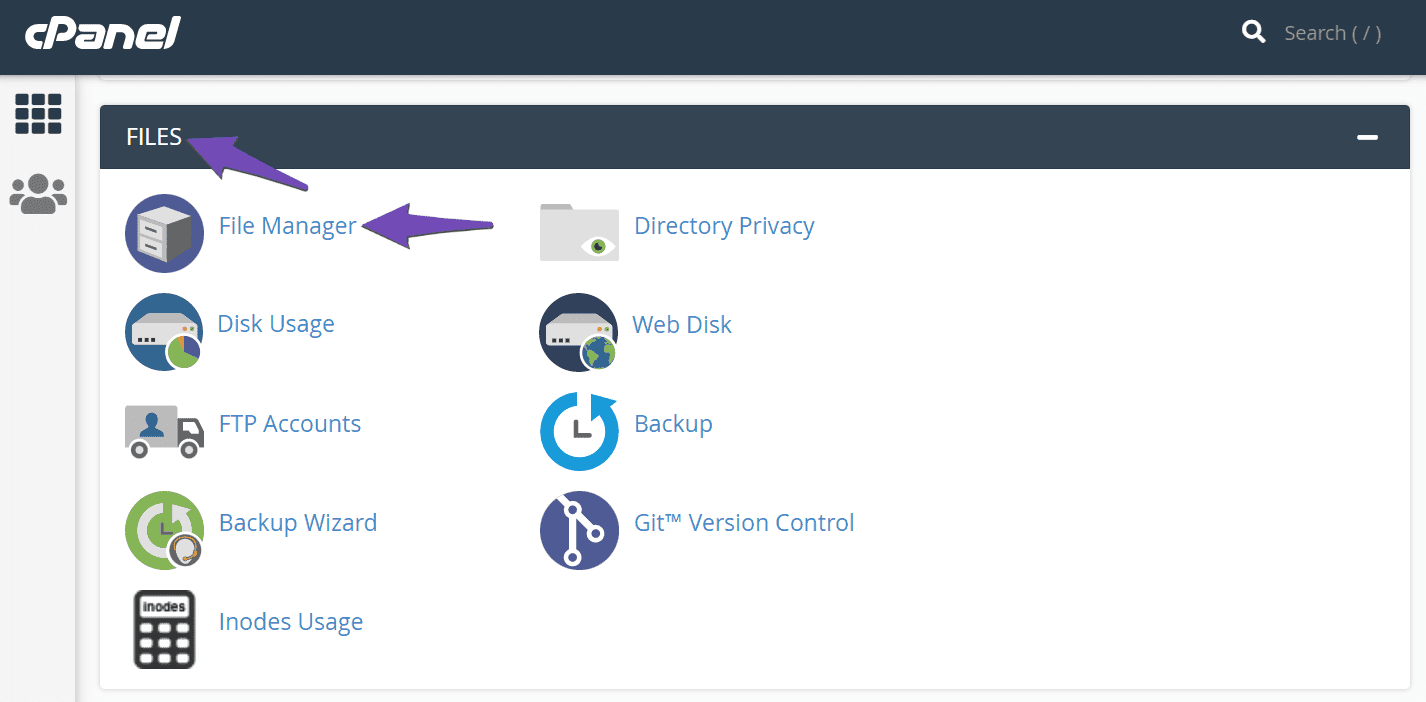

To confirm whether your site contains another robots.txt file, access your WordPress root directory using cPanel or an FTP application. If you use cPanel, head over to FILES → File Manager, as shown below.

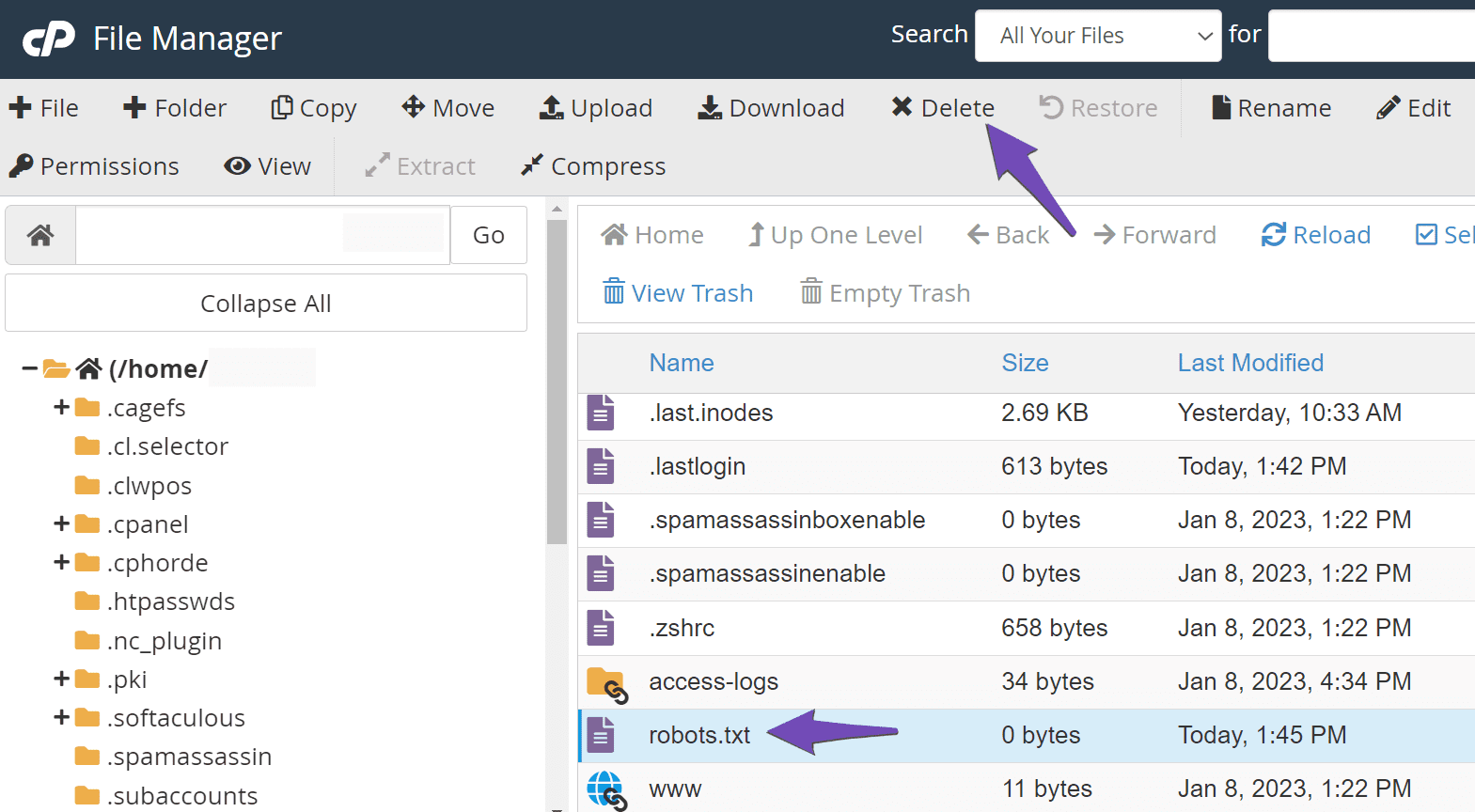

You will be presented with the root directory of your WordPress site. The next step is to check whether it contains a file named robots.txt.

If a robots.txt file is present, select it and click Delete, as shown below.

Once done, clear your website and server-level cache and test your robots.txt URL again.
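If you prefer to run this test from a script, the Python sketch below is one way to do it. It fetches a robots.txt URL using only the standard library and labels the response as a 404 error, a blank page, or a working file. The helper names `check_robots_url` and `classify_robots_response` are hypothetical, `yourdomain.com` is a placeholder, and the script is not part of Rank Math. Because a CDN or cache can keep serving an old response, run it after clearing your caches.

```python
import urllib.error
import urllib.request


def classify_robots_response(status: int, body: str) -> str:
    """Label a robots.txt response using the symptoms described in this guide."""
    if status == 404:
        return "404 error"
    if status != 200:
        return f"unexpected status {status}"
    if not body.strip():
        return "blank page"
    return "ok"


def check_robots_url(url: str, timeout: float = 10.0) -> str:
    """Fetch a robots.txt URL and classify the result (hypothetical helper)."""
    request = urllib.request.Request(url, headers={"User-Agent": "robots-check/1.0"})
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            body = response.read().decode("utf-8", errors="replace")
            return classify_robots_response(response.status, body)
    except urllib.error.HTTPError as err:
        # urlopen raises HTTPError for 4xx/5xx responses, including a 404.
        return classify_robots_response(err.code, "")
    except urllib.error.URLError as err:
        # DNS failures, refused connections, timeouts, and similar problems.
        return f"unreachable ({err.reason})"
```

For example, `check_robots_url("https://yourdomain.com/robots.txt")` should return `"ok"` once the issue is resolved.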

If your root directory does not contain a robots.txt file, you may be experiencing redirection issues. To solve this, add the applicable rewrite rules to your server configuration: the .htaccess file on Apache servers, or your site’s server block on Nginx servers, which do not read .htaccess files. You can refer to this guide on how to edit your .htaccess file.

If your site runs on an Nginx server, add the rewrite rules below.

```nginx
# START Nginx Rewrites for Rank Math Robots.txt File
rewrite ^/robots.txt$ /?robots=1 last;
rewrite ^/([^/]+?)-robots([0-9]+)?.txt$ /?robots=$1&robots_n=$2 last;
# END Nginx Rewrites for Rank Math Robots.txt File
# Reload Nginx after saving (for example, with nginx -s reload).
```

If your site runs on an Apache server, add the rewrite rules below to your .htaccess file.

```apache
# START of Rank Math Robots.txt Rewrite Rules
RewriteEngine On
RewriteBase /
RewriteRule ^robots.txt$ /index.php?robots=1 [L]
# END of Rank Math Robots.txt Rewrite Rules
```

3 The Edit robots.txt Setting is Missing

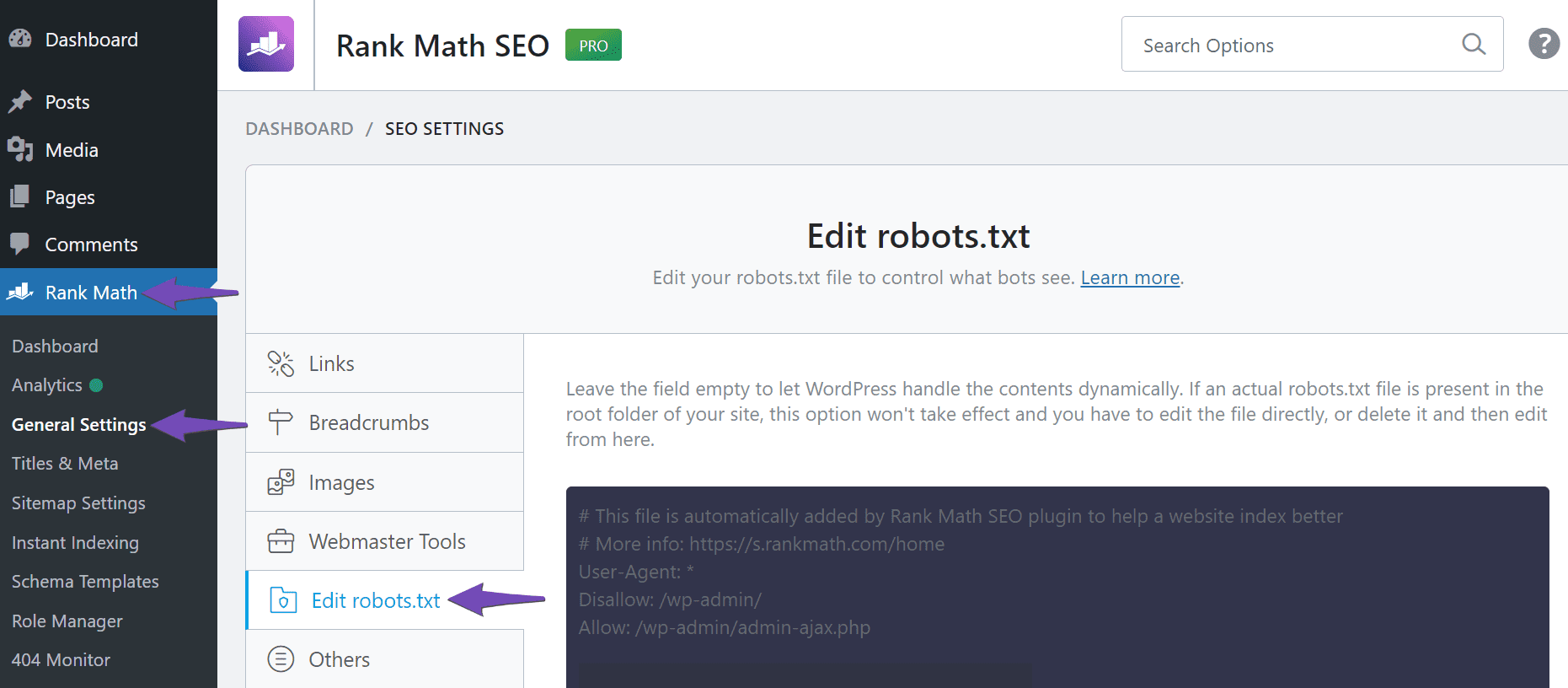

The option to edit your robots.txt file is located at Rank Math SEO → General Settings → Edit robots.txt, as shown below.

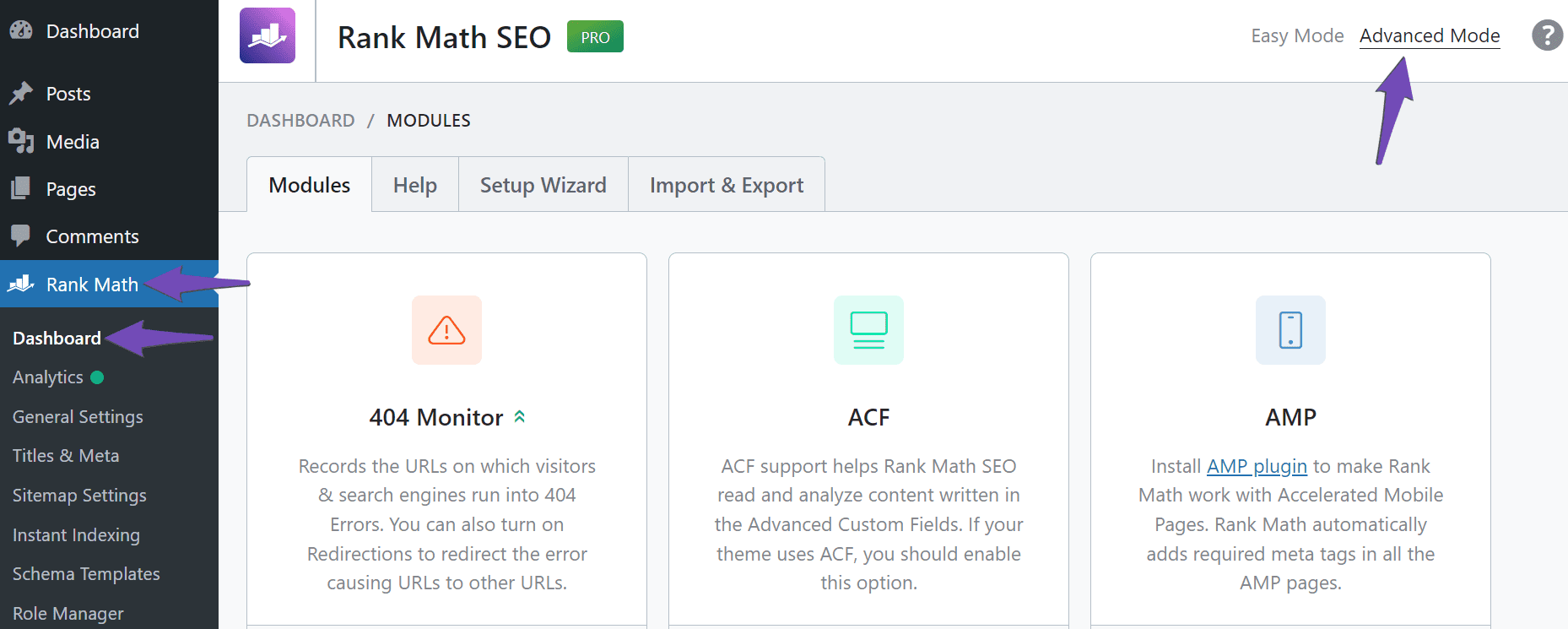

If the Edit robots.txt option is not displayed, then you are using Rank Math in Easy Mode. To change this, navigate to WordPress Dashboard → Rank Math SEO and enable Advanced Mode, as shown below.

The Edit robots.txt option will now be displayed.

4 robots.txt is Blocking Google From Crawling My Site

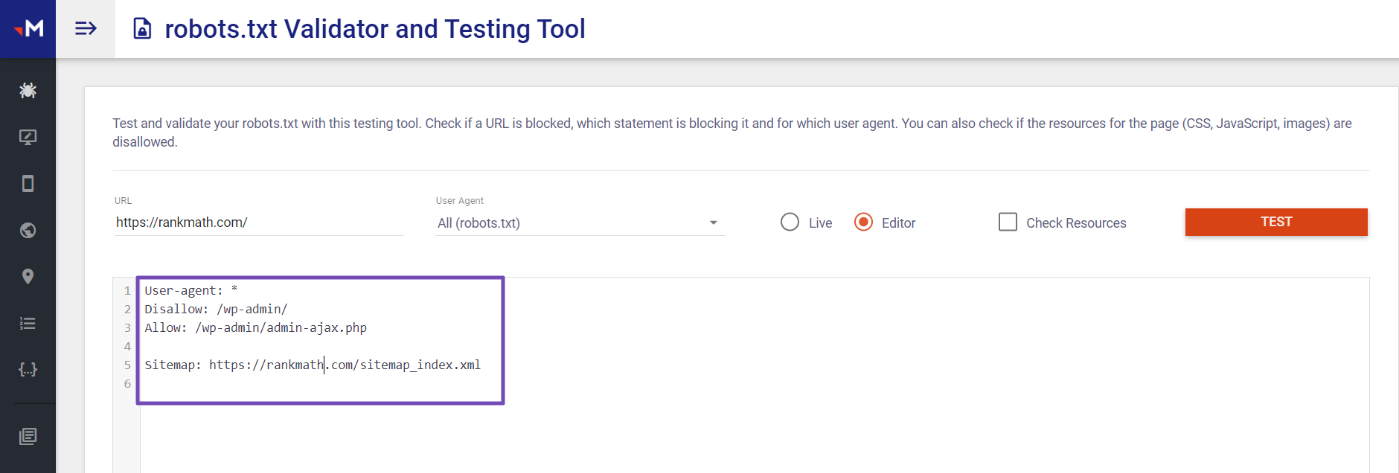

If you think your robots.txt file is blocking Google from crawling your site, navigate to this Robots Testing Tool. Type in your URL, choose the “All (robots.txt)” option as the User Agent from the dropdown list, and click the TEST button.

Your robots.txt file will be displayed, as shown below.

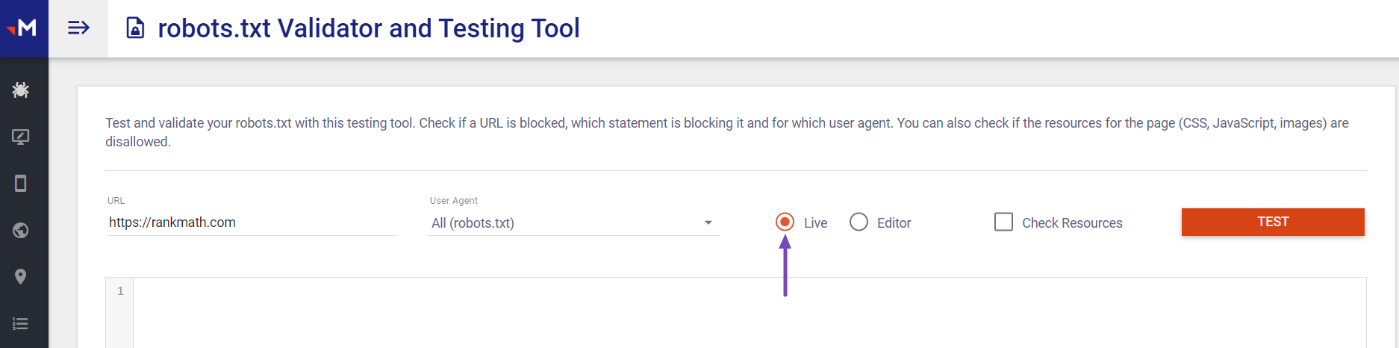

If you have previously used the Robots Testing Tool, an earlier version of your robots.txt file may be displayed. This version may differ from your current robots.txt file, especially if you have updated the file since your last visit.

To ensure that you are viewing the latest version of your robots.txt file, clear your website cache and test your robots.txt file again. Also, check the Live option before running the test, as shown below.

Once done, your current robots.txt file will be displayed. The next step is to check whether the file contains the below rule.

Note: The rule below blocks the crawler named in the User-agent line above it from crawling your site. If the User-agent is set to Googlebot, your robots.txt file will stop Google from crawling your site. If the User-agent is set to *, it will stop every compliant bot, including Google, from crawling your site, unless a bot has its own, more specific User-agent group.

```text
Disallow: /
```

If the above rule exists, then you need to edit your robots.txt file to remove it. You can refer to this guide on editing robots.txt with Rank Math. If you are unable to edit your robots.txt file, you can refer to this guide to troubleshoot the issue.
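To see how the User-agent line changes the effect of `Disallow: /`, you can reproduce the check offline with Python’s built-in `urllib.robotparser` module. This is a minimal sketch, assuming you paste in your robots.txt content; the `is_allowed` helper and the sample files are illustrative and not taken from Rank Math. Python’s parser applies the first matching rule and does not support Google’s wildcard syntax, so treat its answers as an approximation of Google’s behavior.

```python
from urllib.robotparser import RobotFileParser


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if robots_txt lets user_agent crawl url (illustrative helper)."""
    parser = RobotFileParser()
    # parse() takes the file's lines directly, so nothing is downloaded.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Disallow: / under a Googlebot group blocks Google only.
googlebot_blocked = "User-agent: Googlebot\nDisallow: /\n"
# Disallow: / under the * group blocks every crawler without its own group.
all_blocked = "User-agent: *\nDisallow: /\n"

home = "https://yourdomain.com/"
print(is_allowed(googlebot_blocked, "Googlebot", home))  # False
print(is_allowed(googlebot_blocked, "Bingbot", home))    # True
print(is_allowed(all_blocked, "Googlebot", home))        # False
```

If the result for Googlebot is `False` on your real file, look for the `Disallow: /` rule described above.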

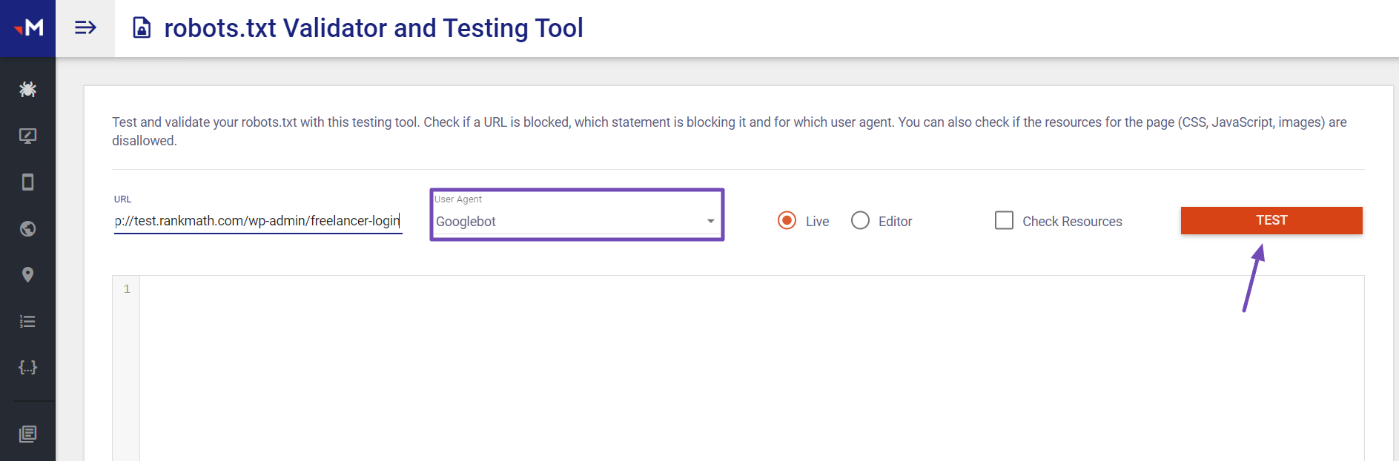

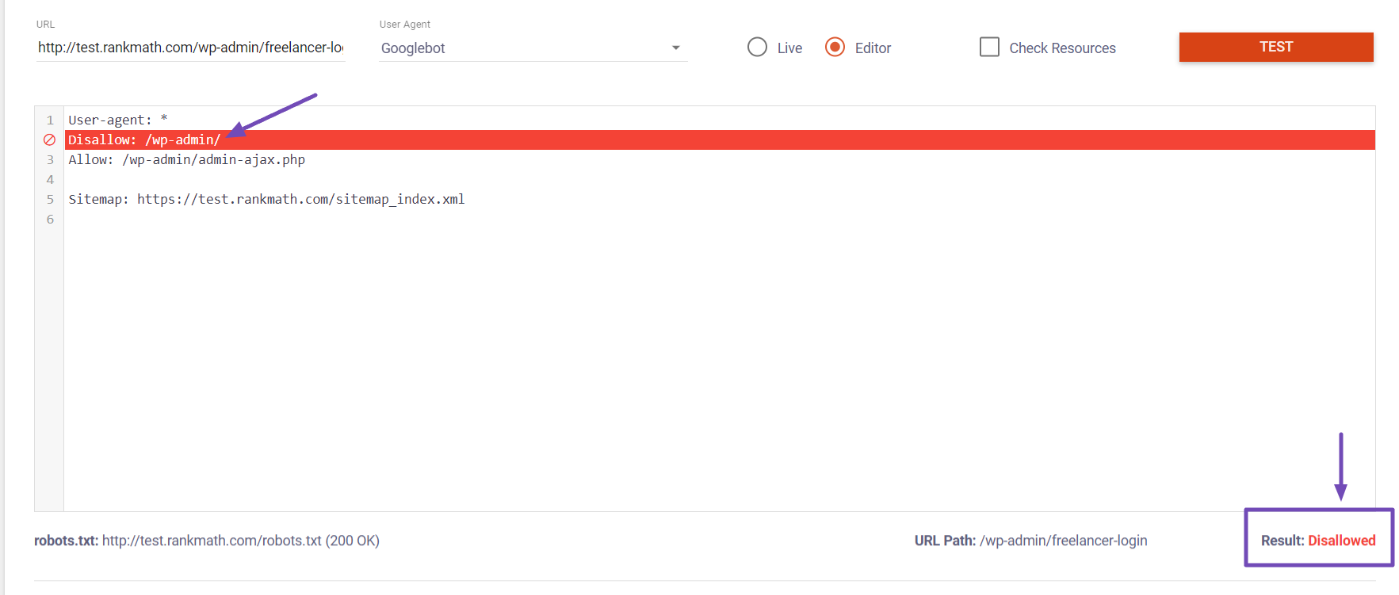

You can also use the Robots Testing Tool to check whether Google is blocked from crawling a specific URL on your site. To do that, enter the URL in the provided field, choose ‘Googlebot’ as the User Agent from the dropdown list, and click the TEST button.

If Google is blocked from crawling the URL, the tool will return a Disallowed result and highlight the blocking rule, as shown below.
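If you want to see which rule blocks a given URL without using the online tool, the Python sketch below mimics that output. It is a simplified, hypothetical matcher written for illustration: it picks the group that names the crawler (falling back to `*`) and follows Google’s documented precedence, where the longest matching path wins and Allow wins a tie. It ignores the `*` and `$` path wildcards, so it is not a full robots.txt parser.

```python
from typing import List, Optional, Tuple
from urllib.parse import urlparse

Rule = Tuple[str, str]  # (field, path), e.g. ("disallow", "/private/")
Group = Tuple[List[str], List[Rule]]  # (user agents, rules)


def parse_groups(robots_txt: str) -> List[Group]:
    """Split robots.txt text into user-agent groups (simplified, no wildcards)."""
    groups: List[Group] = []
    agents: List[str] = []
    rules: List[Rule] = []
    for raw_line in robots_txt.splitlines():
        line = raw_line.split("#", 1)[0].strip()  # drop comments
        if ":" not in line:
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field == "user-agent":
            if rules:  # a user-agent line after rules starts a new group
                groups.append((agents, rules))
                agents, rules = [], []
            agents.append(value.lower())
        elif field in ("allow", "disallow") and agents:
            rules.append((field, value))
    if agents:
        groups.append((agents, rules))
    return groups


def blocking_rule(robots_txt: str, user_agent: str, url: str) -> Optional[str]:
    """Return the Disallow rule that blocks url for user_agent, or None if allowed."""
    groups = parse_groups(robots_txt)
    token = user_agent.lower()
    # Use the groups that name this crawler; otherwise fall back to the * group.
    selected = [rules for agents, rules in groups if token in agents]
    if not selected:
        selected = [rules for agents, rules in groups if "*" in agents]
    parsed = urlparse(url)
    path = parsed.path or "/"
    if parsed.query:
        path += "?" + parsed.query
    best: Optional[Rule] = None
    for rules in selected:
        for field, value in rules:
            # Skip non-matching rules; an empty value matches nothing.
            if not value or not path.startswith(value):
                continue
            # The longest matching path wins; on a tie, Allow beats Disallow.
            if (
                best is None
                or len(value) > len(best[1])
                or (len(value) == len(best[1]) and field == "allow")
            ):
                best = (field, value)
    if best is not None and best[0] == "disallow":
        return f"Disallow: {best[1]}"
    return None


robots = "User-agent: *\nDisallow: /private/\nAllow: /private/public-page/\n"
print(blocking_rule(robots, "Googlebot", "https://yourdomain.com/private/report/"))
# Disallow: /private/
print(blocking_rule(robots, "Googlebot", "https://yourdomain.com/private/public-page/"))
# None
```

The second URL is allowed because the longer `Allow: /private/public-page/` rule outranks `Disallow: /private/`.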

5 Robots.txt Error in PageSpeed Insights

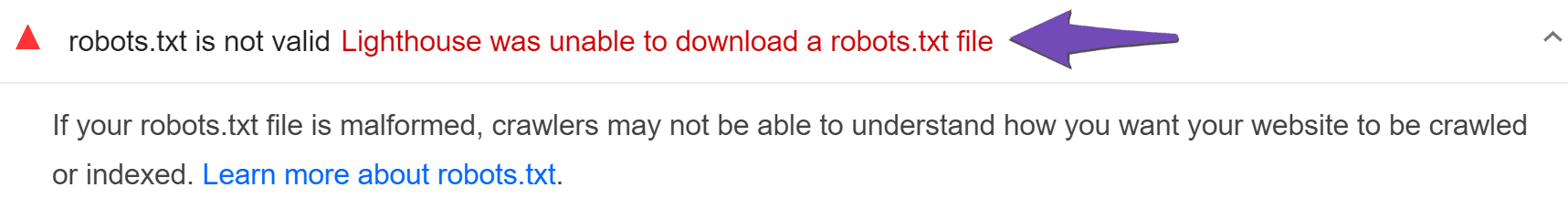

If you inspect a URL in PageSpeed Insights, it may return a “robots.txt is not valid – Lighthouse was unable to download a robots.txt file” error message, as shown below.

This can happen when you use a content delivery network (CDN) such as Cloudflare. Rank Math does not create a physical robots.txt file on your site; instead, the file is generated and displayed when someone visits your robots.txt URL. So you can safely ignore this error if your robots.txt file is available at https://yourdomain.com/robots.txt.

Optionally, you can add the rule below to your robots.txt file, inside a User-agent group such as User-agent: *, and test the URL again in PageSpeed Insights.

```text
Disallow: /cdn-cgi/
```

Those are the fixes for the common robots.txt issues you may encounter on your site. We hope this helps you solve your robots.txt issue. If you have any questions, you’re always welcome to contact our dedicated support team. We’re available 24/7, 365 days a year…