What is Log File Analysis?

Log file analysis is the process of reviewing and analyzing your website’s log files to identify server-related issues and uncover opportunities for enhancing your technical SEO.

Website servers contain one or more log files that store the details of the browsers and crawler bots that visit them. The details captured in the log file will vary but usually include certain information about the visiting browser or bot, including:

- Their IP address

- Their user agent (the browser, device, or bot making the request)

- The URL they visited

- The time they visited the URL

- The HTTP request they sent to the server

- The HTTP status code the server returned
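As a rough illustration, the fields listed above can be pulled out of a single log line with a regular expression. This is a minimal sketch that assumes the Combined Log Format commonly written by Apache and Nginx; your server may use a different layout. The sample line and the `parse_line` helper are made up for illustration.

```python
import re

# Assumed layout: Combined Log Format. Adjust the pattern if your server
# writes its logs differently.
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) (?P<size>\S+) '
    r'"(?P<referrer>[^"]*)" "(?P<user_agent>[^"]*)"'
)


def parse_line(line):
    """Return a dict of fields for one log line, or None if it does not match."""
    match = LOG_PATTERN.match(line)
    if match is None:
        return None
    entry = match.groupdict()
    # A request normally looks like "GET /path HTTP/1.1".
    parts = entry["request"].split()
    if len(parts) == 3:
        entry["method"], entry["url"] = parts[0], parts[1]
    else:
        entry["method"], entry["url"] = None, None
    entry["status"] = int(entry["status"])
    return entry


# Hypothetical sample line, not taken from a real server.
sample = (
    '203.0.113.7 - - [10/Mar/2024:13:55:36 +0000] '
    '"GET /blog/post-1 HTTP/1.1" 200 5123 '
    '"https://example.com/" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"'
)
entry = parse_line(sample)
print(entry["ip"], entry["url"], entry["status"], entry["user_agent"])
```

Each parsed entry exposes the IP address, timestamp, requested URL, status code, and user agent as named keys, which makes later filtering and counting easier.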

Bloggers review their log file details to understand how browsers and bots interact with their sites. This allows them to identify technical SEO issues and uncover opportunities to optimize their site performance, user experience, and SEO.

Importance of Log File Analysis

Log files record every request your visitors make to your site. This includes requests from human visitors and bots, including search engine crawlers. This makes the log file crucial for bloggers who want to understand how visitors, bots, and search engine crawlers interact with their sites.

Site owners can analyze the details captured in their log files to understand the requests their visitors sent and how their site responded to those requests. This allows them to understand their site’s behavior, identify existing SEO issues, and uncover areas for improvement.

Where to Find Your Server Logs

You will find your server logs in your hosting control panel. Some hosts also have file managers where you can access and download your server log without accessing your hosting control panel.

However, note that you may have multiple log files. For instance, your content delivery network (CDN) will have a separate log file from the one on your server. Your host may also split your log file across multiple servers, while your hosting control panel may split your server logs into multiple files.

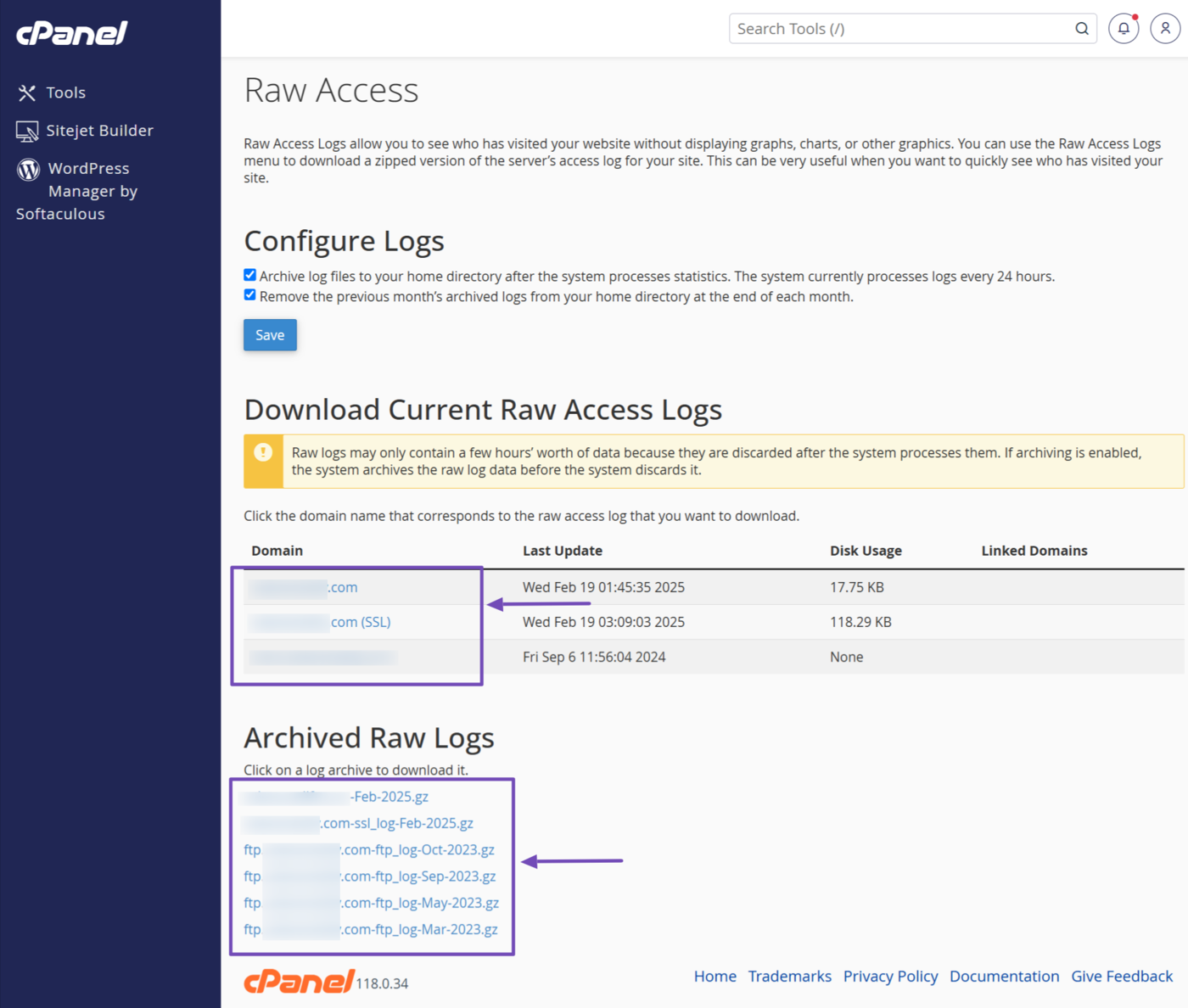

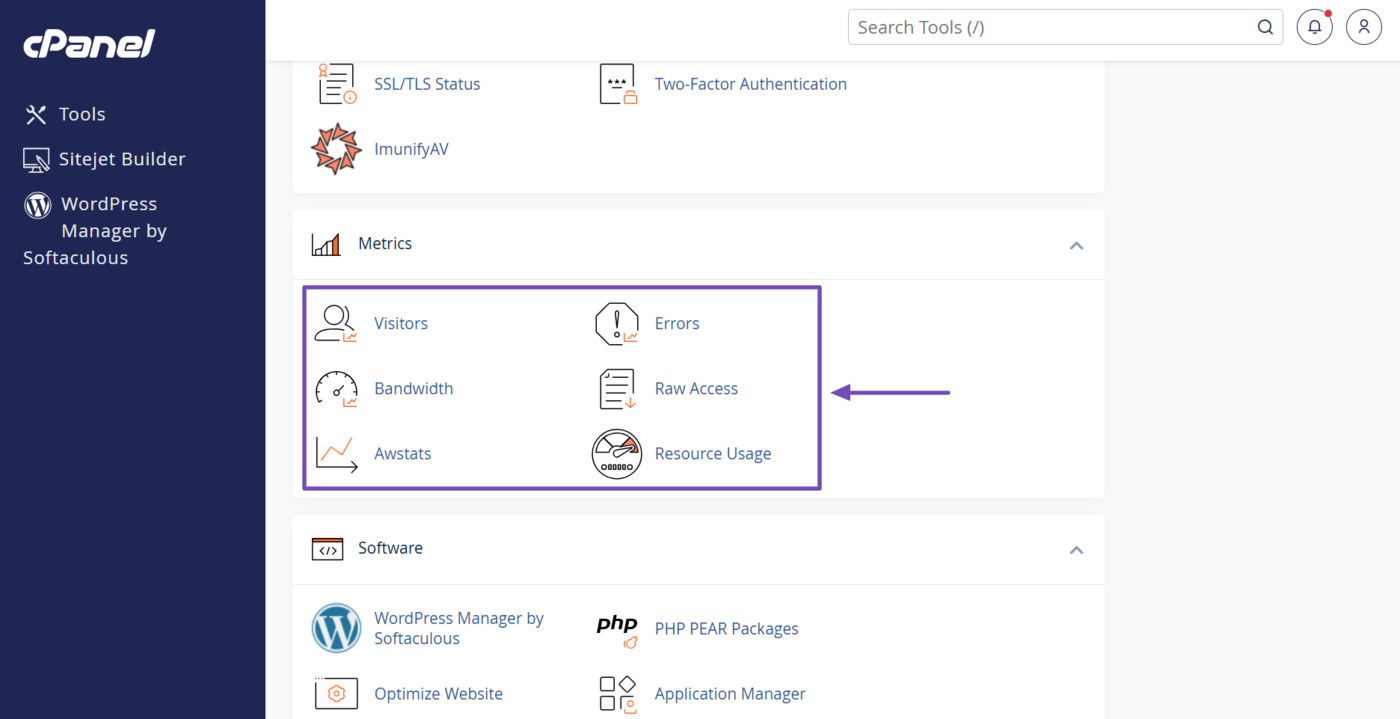

For example, cPanel splits your logs into multiple files and reports, most of which are displayed in the Metrics section. These files and reports are organized under the Visitors, Errors, Bandwidth, Raw access, Awstats, and Resource usage options.

Visitors: This shows the details of your visitors, including the number of visitors, their IP addresses, the pages they viewed, and how they found your URL.

Errors: This shows the errors (e.g., 404 Not Found and 500 Internal Server Error) visitors encountered on your site and the URLs that returned the errors.

Bandwidth: This shows the amount of data transferred to and from your website over a specific period.

Raw Access: This shows every request made to your site along with the details of the browser or bot that made the request and the response your site returned. You can download these files and analyze them manually or with third-party log file analysis software.

Awstats: This shows detailed reports about your visitors, including the number of visits and unique visitors, their location, the pages they viewed, the browser and operating system they used, and where they found your URL.

Resource Usage: This shows detailed information about the resources your site consumes on the server. It displays multiple reports, including CPU, memory, bandwidth, and disk space usage.

Overall, you should access all your log files wherever they are located to ensure you have a comprehensive report about how visitors access and use your site. If unsure, you may need to contact your host to understand which servers and platforms contain your logs.

Common Misconceptions About Analyzing Log Files

Several misconceptions can hinder your ability to properly analyze your log files. It is crucial to understand and address these misconceptions to gain deeper insights into your reports and get the most out of your log file analysis.

1 All Log Files Look the Same

It is common for bloggers to compile their log files from multiple sources, and each one can have its own unique formatting structure. So, log files do not look the same. Take note of this, as assuming they all have the same structure can lead to errors and misdiagnosis.
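One way to guard against mixed formats is to check which layout each line follows before parsing it. The sketch below assumes only two layouts, the Common Log Format and the Combined Log Format, and reports anything else as unknown. The `detect_format` helper and the sample lines are hypothetical.

```python
import re

# Shared prefix of the two assumed layouts.
COMMON = (
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) (?P<size>\S+)'
)
FORMATS = {
    # Combined adds the referrer and user agent after the common fields.
    "combined": re.compile(COMMON + r' "(?P<referrer>[^"]*)" "(?P<user_agent>[^"]*)"'),
    "common": re.compile(COMMON),
}


def detect_format(line):
    """Return the name of the first layout that matches the whole line."""
    text = line.strip()
    for name, pattern in FORMATS.items():
        if pattern.fullmatch(text):
            return name
    return "unknown"


# Made-up lines for illustration.
common_line = '198.51.100.4 - - [10/Mar/2024:14:00:01 +0000] "GET / HTTP/1.1" 200 1024'
combined_line = common_line + ' "-" "Mozilla/5.0"'
json_line = '{"ip": "198.51.100.4", "status": 200}'

for line in (common_line, combined_line, json_line):
    print(detect_format(line))
```

Lines reported as unknown are a signal to add another pattern or to handle that source separately, rather than forcing it through a parser built for a different layout.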

2 Log Files Should Only Be Analyzed After Security Issues

While log analysis is vital for investigating security breaches, its utility extends far beyond that. It helps monitor site performance, which is necessary for identifying, troubleshooting, and resolving technical SEO issues.

3 Log Files Should Only Be Analyzed in Real-Time

While real-time log analysis can be beneficial, it is not necessary or feasible for every site. Some sites generate vast amounts of log data, which makes real-time processing resource-intensive. Additionally, historical log data can provide valuable insights to help understand trends and make long-term plans.

4 Larger Log Files Lead to Better Insights

The assumption that a larger volume of logs automatically leads to better insights is misleading, as excessive logging can quickly overwhelm the blogger. So, filter your log data and prioritize which data should be analyzed. You should also focus on keeping only relevant and meaningful logs.

5 Log Files Are Reliable and Error Free

Log files can contain inaccuracies due to misconfigurations and bugs. They may also be inconsistent or incomplete for various reasons. Make sure to verify the integrity of your log data before starting your analysis.
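A basic integrity check can be sketched as a pass that counts lines that do not match the expected layout and lines whose timestamp cannot be parsed. This assumes Apache-style timestamps such as `10/Mar/2024:13:55:36 +0000`. The `integrity_report` helper and the sample lines are illustrative only.

```python
import re
from datetime import datetime

# Assumed layout: Common/Combined Log Format prefix.
LINE = re.compile(r'\S+ \S+ \S+ \[(?P<time>[^\]]+)\] "[^"]*" (?P<status>\d{3}) \S+')
TIME_FORMAT = "%d/%b/%Y:%H:%M:%S %z"


def integrity_report(lines):
    """Count total, malformed, and bad-timestamp lines."""
    report = {"total": 0, "malformed": 0, "bad_timestamp": 0}
    for line in lines:
        report["total"] += 1
        match = LINE.match(line)
        if match is None:
            report["malformed"] += 1
            continue
        try:
            datetime.strptime(match.group("time"), TIME_FORMAT)
        except ValueError:
            report["bad_timestamp"] += 1
    return report


# Made-up lines: one valid, one truncated, one with an unusable timestamp.
lines = [
    '203.0.113.7 - - [10/Mar/2024:13:55:36 +0000] "GET / HTTP/1.1" 200 512',
    '203.0.113.7 - - [10/Mar/2024:13:55:37 +0000] "GET /abou',
    '203.0.113.7 - - [10/Mar/2024 13:55] "GET / HTTP/1.1" 200 512',
]
report = integrity_report(lines)
print(report)
```

A high share of malformed or undated lines suggests the file is truncated or uses a format the parser does not expect, so it is worth resolving that before drawing conclusions.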

6 Only Analyze Your Log Files When Something Goes Wrong

Regularly analyzing your log data helps to identify potential issues before they escalate into significant problems. This saves time and costs in the long run and helps to prevent SEO and user experience issues that can harm your site.

7 All Log File Analysis Tools Work the Same Way

Different log analysis tools cater to various platforms, log types, and specific analytical needs. A log file analysis tool that works well for one kind of log may be ineffective or overwhelming for another. So, check that your tool supports your log format and delivers the type of data and insights you require.

8 You Can Always Manually Analyze Your Log Files

You can manually analyze small log files. However, this becomes difficult or impossible with larger log sets. In such situations, you will need to rely on automated tools and log file analysis software to uncover your insights and reports. Some hosting control panels like cPanel also provide these reports.

Log File Analysis Best Practices

Analyzing your log file may be confusing and quickly become overwhelming, especially when doing it for the first time. However, once you understand why you are reviewing your log file and follow the best practices below, you can gain valuable insights into your site’s audience and technical SEO.

1 Focus on Search Engine Crawler Bot Activity

Pay attention to requests from search engine crawler bots like Googlebot or Bingbot. Analyze and review their crawl patterns to ensure they efficiently crawl your site and can access your key pages. You should also ensure they are not wasting your crawl budget on irrelevant content.
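A first pass at reviewing crawler activity could count how often each bot requested each URL. The sketch below assumes Combined Log Format lines and identifies bots only by a substring of the user agent. User agent strings can be spoofed, so treat the result as an estimate. The `crawler_hits` helper, the token list, and the sample lines are hypothetical.

```python
import re
from collections import Counter

# Captures the requested URL and the user agent from a Combined Log Format line.
PATTERN = re.compile(
    r'"\S+ (?P<url>\S+) [^"]*" \d{3} \S+ "[^"]*" "(?P<ua>[^"]*)"'
)
# Substrings assumed to identify the crawlers of interest.
BOT_TOKENS = ("Googlebot", "bingbot")


def crawler_hits(lines):
    """Count requests per (bot, URL) pair."""
    hits = Counter()
    for line in lines:
        match = PATTERN.search(line)
        if match is None:
            continue
        user_agent = match.group("ua").lower()
        for token in BOT_TOKENS:
            if token.lower() in user_agent:
                hits[(token, match.group("url"))] += 1
    return hits


# Made-up log lines for illustration.
GOOGLEBOT = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
BINGBOT = "Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)"
PREFIX = '203.0.113.7 - - [10/Mar/2024:13:55:36 +0000] '
lines = [
    PREFIX + f'"GET /a HTTP/1.1" 200 512 "-" "{GOOGLEBOT}"',
    PREFIX + f'"GET /a HTTP/1.1" 200 512 "-" "{GOOGLEBOT}"',
    PREFIX + f'"GET /b HTTP/1.1" 200 512 "-" "{BINGBOT}"',
    PREFIX + '"GET /a HTTP/1.1" 200 512 "-" "Mozilla/5.0 (Windows NT 10.0)"',
]
hits = crawler_hits(lines)
for (bot, url), count in hits.most_common():
    print(bot, url, count)
```

Sorting the counts makes it easier to spot key pages that crawlers rarely request and low-value URLs that absorb many requests.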

2 Review Your HTTP Server Response Codes

Scan your logs for HTTP status codes like 404 Not Found and 500 Internal Server Error, which indicate the visitor could not access your content. Then identify the URLs returning these errors and fix them or redirect them to working URLs.
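The scan described above might look like the following sketch, which counts responses with a status code of 400 or higher per URL. It assumes the request and status code appear as they do in the Common and Combined Log Formats. The `error_urls` helper and the sample lines are made up for illustration.

```python
import re
from collections import Counter

# Captures the requested URL and the status code that follows the request.
PATTERN = re.compile(r'"\S+ (?P<url>\S+) [^"]*" (?P<status>\d{3}) ')


def error_urls(lines):
    """Count requests per (status, URL) pair for 4xx and 5xx responses."""
    errors = Counter()
    for line in lines:
        match = PATTERN.search(line)
        if match is None:
            continue
        status = int(match.group("status"))
        if status >= 400:
            errors[(status, match.group("url"))] += 1
    return errors


# Made-up log lines for illustration.
PREFIX = '203.0.113.7 - - [10/Mar/2024:13:55:36 +0000] '
lines = [
    PREFIX + '"GET /old-page HTTP/1.1" 404 153',
    PREFIX + '"GET /old-page HTTP/1.1" 404 153',
    PREFIX + '"GET /checkout HTTP/1.1" 500 0',
    PREFIX + '"GET / HTTP/1.1" 200 5120',
]
errors = error_urls(lines)
for (status, url), count in errors.most_common():
    print(status, url, count)
```

The most frequent entries are a reasonable starting point when deciding which URLs to fix or redirect first.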

3 Use Log File Analysis Tools

While you may analyze your log files manually, you will require tools to analyze large amounts of data. However, you can still consider using analysis tools for smaller amounts of data as they are better optimized for visualizing data and generating actionable insights.

4 Compare Your Log File and Google Search Console Data

Cross-reference your log file data with Google Search Console to validate crawl stats, identify discrepancies, and ensure search engines index your site correctly and efficiently.

5 Regularly Back Up Your Log Files

Your host, server, hosting control panel, and content delivery network may periodically delete your log data. To avoid that and ensure you can still access your historical data, set up your system to automatically back up your log file.
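If you have file access to your logs, a backup can be as simple as copying each log into a separate folder as a dated, compressed file. The sketch below uses only the Python standard library. The `backup_log` helper and the paths in the usage note are hypothetical, and you would still need to schedule it yourself, for example with cron.

```python
import gzip
import shutil
from datetime import date
from pathlib import Path


def backup_log(log_path, backup_dir):
    """Copy a log file into backup_dir as a gzip file stamped with today's date."""
    log_path = Path(log_path)
    backup_dir = Path(backup_dir)
    backup_dir.mkdir(parents=True, exist_ok=True)
    target = backup_dir / f"{log_path.name}.{date.today().isoformat()}.gz"
    # Stream the file so large logs are not loaded into memory at once.
    with open(log_path, "rb") as source, gzip.open(target, "wb") as destination:
        shutil.copyfileobj(source, destination)
    return target
```

For example, `backup_log("logs/access.log", "log-backups")` would write a file named like `access.log.<today's date>.gz` into the `log-backups` folder. Both paths are placeholders for wherever your host stores its logs.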

6 Regularly Review Your Log Files

Regularly review and analyze your log files. Set a consistent schedule, such as monthly or bimonthly. During these periods, look out for crawl errors, server errors, and unusual traffic patterns that may impact your SEO and user experience.