What is Crawl Delay?

Crawl delay is a directive that tells a crawler how long it should wait between successive requests to a site. It is defined in the site’s robots.txt file but may also be specified in the server settings.

Crawling uses up a server’s resources. Heavy crawling can slow the server down or even overload it and take it offline. To avoid that, some bloggers specify a crawl delay to tell search engines how frequently they can crawl the site.

However, not all crawlers obey the crawl delay directive. For instance, Bing and Yahoo obey it, while Google and Yandex do not.

As an alternative to the crawl delay directive, some search engines provide free tools that let bloggers reduce the rate at which their site is crawled. Others encourage bloggers to return certain HTTP error codes to slow crawling down.

How to Specify a Crawl Delay

You can specify a crawl delay by adding the rule below to your robots.txt file:

    User-agent: *
    Crawl-delay: 10

In the rule above, 10 is the delay in seconds. You can change it to any number of your choice. The asterisk (*) in the User-agent line means the rule applies to all crawlers. If you want to assign a crawl delay to a specific crawler, replace the asterisk with that crawler’s name.

For instance, the directive below will set a crawl delay for Bingbot.

    User-agent: Bingbot
    Crawl-delay: 10

Similarly, the directive below will set a crawl delay for Slurp, Yahoo’s crawler.

    User-agent: Slurp
    Crawl-delay: 10
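To see how a well-behaved crawler might read these rules, here is a minimal sketch using Python's standard urllib.robotparser module. The robots.txt content, the 5-second value for Bingbot, and the helper name crawl_delay_for are illustrative assumptions, not taken from any real site.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content: a specific rule for Bingbot
# and a catch-all rule for every other crawler.
ROBOTS_TXT = """\
User-agent: Bingbot
Crawl-delay: 5

User-agent: *
Crawl-delay: 10
"""


def crawl_delay_for(robots_txt, user_agent):
    """Return the crawl delay (in seconds) that applies to user_agent, or None."""
    parser = RobotFileParser()
    # parse() expects an iterable of lines rather than one long string.
    parser.parse(robots_txt.splitlines())
    return parser.crawl_delay(user_agent)


bing_delay = crawl_delay_for(ROBOTS_TXT, "Bingbot")
other_delay = crawl_delay_for(ROBOTS_TXT, "SomeOtherBot")
print(bing_delay, other_delay)
```

A crawler named in its own group gets that group's delay. Any other crawler falls back to the wildcard group.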

Google does not obey the crawl delay. Instead, if you want to reduce the rate at which Googlebot crawls your site, you should return an HTTP 500, 503, or 429 status code from multiple URLs on your site. This is a temporary measure, and you should use it for two days at most.
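As a rough illustration of the temporary measure, the sketch below is a bare WSGI application that answers with a 503 status while a switch is on. The THROTTLE_GOOGLEBOT flag and the choice to match on the "Googlebot" user-agent string are assumptions made for this example. User-agent strings can be spoofed, and a real site would usually do this in its web server or CDN configuration.

```python
# Hypothetical switch: turn it on only while the server is overloaded,
# and turn it off again within two days.
THROTTLE_GOOGLEBOT = True


def app(environ, start_response):
    """Minimal WSGI app that returns 503 to Googlebot while throttling is on."""
    user_agent = environ.get("HTTP_USER_AGENT", "")

    if THROTTLE_GOOGLEBOT and "Googlebot" in user_agent:
        body = b"Service temporarily unavailable"
        status = "503 Service Unavailable"
    else:
        body = b"Hello, visitor"
        status = "200 OK"

    headers = [
        ("Content-Type", "text/plain"),
        ("Content-Length", str(len(body))),
    ]
    start_response(status, headers)
    return [body]
```

To try it locally, you could serve app with wsgiref.simple_server.make_server from the standard library and send requests with different User-Agent headers.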

If you want to reduce your crawl rate over a longer period, you should file an overcrawling report with Google.

How Bing Handles the Crawl Delay

Bing recommends setting the crawl delay between one and 30 seconds. If you set it to 10 seconds, for instance, Bing may crawl at most one page on your site every 10 seconds, or up to six pages per minute.

Similarly, when you set it to 20 seconds, Bing may crawl at most one page every 20 seconds, or up to three pages per minute. However, these figures are upper limits, as Bing does not crawl your site continuously. So, Bing will likely crawl fewer pages than the numbers suggest.
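The arithmetic behind these figures is simply 60 seconds divided by the crawl delay. A small sketch, where the function name max_pages_per_minute is made up for illustration:

```python
def max_pages_per_minute(crawl_delay_seconds):
    """Upper bound on pages crawled per minute for a given crawl delay."""
    if crawl_delay_seconds <= 0:
        raise ValueError("crawl delay must be a positive number of seconds")
    return 60 / crawl_delay_seconds


for delay in (10, 20, 30):
    # 10 s -> 6.0 pages, 20 s -> 3.0 pages, 30 s -> 2.0 pages
    print(f"{delay} s delay: at most {max_pages_per_minute(delay)} pages per minute")
```

These values are ceilings, not predictions. The actual number of pages crawled will usually be lower.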

You can also set different crawl delays for different subdomains, since each subdomain serves its own robots.txt file. In that case, Bing will manage the crawl delay for each subdomain independently. So, you can specify different crawl delays for support.yourdomain.com and www.yourdomain.com.

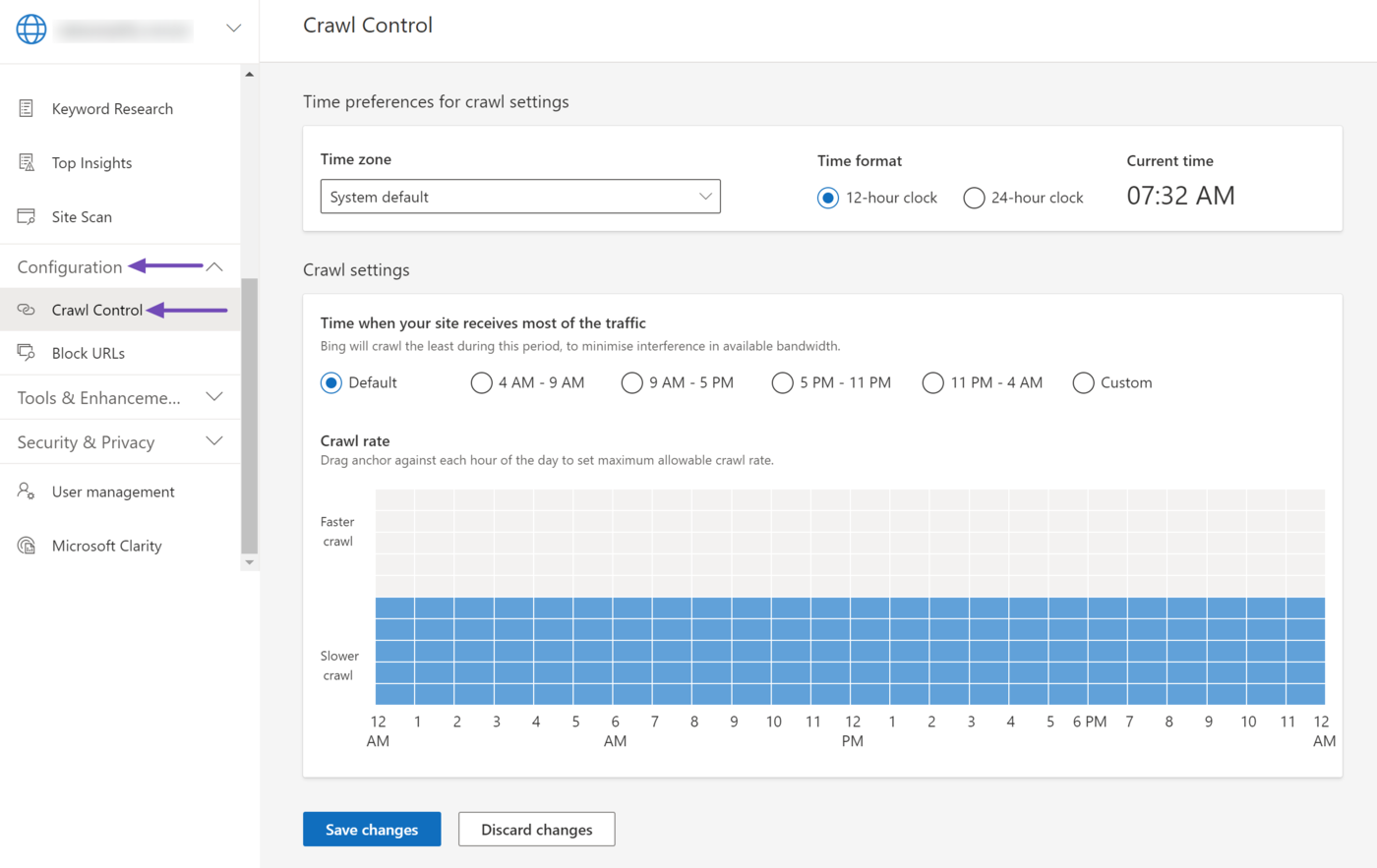

Bing Webmaster Tools also allows you to set a crawl rate. To do this, go to Bing Webmaster Tools and select Configuration → Crawl Control. Then, use the available settings to set your crawl rate.

If you have set a crawl rate in Bing Webmaster Tools and also specify a crawl delay in the robots.txt file, Bing will obey the rule in the robots.txt file.