What is Deindexing?

Deindexing is the practice of removing a webpage or website from search engine result pages. The blogger or search engine may initiate the deindexing.

- A blogger can deindex content they don’t want on search engine result pages

- Search engines generally deindex content that engages in activities that go against their guidelines

Deindexed pages are removed from search results pages. However, visitors can still access them by heading directly to their URL.

Bloggers have various reasons for deindexing a webpage. Typically, they do this to prevent low-quality or private pages, such as thank you, landing, author, and login pages, from appearing on search results pages.

Why Will Google Deindex My Site?

Google will deindex your site if you engage in practices that go against its spam policies. The spam policies are a set of guidelines that Google recommends site owners follow to ensure their content appears on Google Search results pages.

Note, however, that a site is only deindexed after a human reviewer has examined it and concluded that it engaged in spammy practices.

You can lift the penalty by removing the spammy content and filing a reconsideration request in the Google Search Console.

How to Deindex My Content From Search Results Pages

You can deindex a webpage using the noindex tag or the X-Robots-Tag. However, you should know that not all search engines obey the noindex rule. Search engines that obey the noindex rule may also serve your site at locations other than the search results page.

How to Deindex Content Using the Noindex Meta Tag

The noindex meta tag blocks webpages from appearing in search results. You can deindex a webpage by adding the noindex meta tag below to the head section of the page’s HTML code.

<meta name="robots" content="noindex">

This is what the code looks like when added to the head tag of the site’s HTML code.

<!DOCTYPE html>
<html>
<head>
<meta name="robots" content="noindex">
(…)
</head>
<body>
(…)
</body>
</html>

The above noindex meta tag informs all search engines not to index the webpage. However, you can instruct specific search engine crawlers not to index the webpage by replacing robots with the name of the crawler bot.

For example, the noindex meta tag below specifically instructs Googlebot not to index the webpage. Meanwhile, other crawler bots are permitted to index the page.

<meta name="googlebot" content="noindex">

On the subject of excluding specific crawler bots, Google has clarified that it only supports noindex rules directed at googlebot and googlebot-news. It does not support noindex rules directed at its other bots.
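To confirm that a page actually carries one of the meta tags described above, you could parse its HTML and look for a noindex rule. The following is a minimal sketch in Python using only the standard library. The names RobotsMetaParser and is_noindexed are hypothetical, and the check is simplified: it only looks for the literal noindex rule in meta tags named robots or after the given crawler.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects the comma-separated values of named meta tags."""

    def __init__(self):
        super().__init__()
        self.directives = {}  # e.g. {"robots": {"noindex"}}

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr_map = {key.lower(): (value or "") for key, value in attrs}
        name = attr_map.get("name", "").strip().lower()
        content = attr_map.get("content", "")
        if name:
            rules = {r.strip().lower() for r in content.split(",") if r.strip()}
            self.directives.setdefault(name, set()).update(rules)


def is_noindexed(html, crawler="googlebot"):
    """Return True if the page tells `crawler` (or all robots) not to index it."""
    parser = RobotsMetaParser()
    parser.feed(html)
    # A rule addressed to "robots" applies to every crawler.
    for name in ("robots", crawler.lower()):
        if "noindex" in parser.directives.get(name, set()):
            return True
    return False
```

For instance, is_noindexed('<meta name="googlebot" content="noindex">', "googlebot") returns True, while the same markup checked for a different crawler name returns False.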

How to Deindex Content Using the X-Robots-Tag

The X-Robots-Tag is an HTTP response header rather than a meta tag. It is useful for blocking webpages and non-HTML resources like images, videos, and PDF files from appearing in search results. You can block a webpage or resource from appearing in search results using the X-Robots-Tag below.

X-Robots-Tag: noindex

The X-Robots-Tag is configured on the server side. This could be done through the server configuration files or using a server-side scripting language like PHP.

This is what the above header looks like when set in PHP code.

<?php

header('X-Robots-Tag: noindex');

?>
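The same idea can be sketched in other server-side languages. Below is a minimal Python example that wraps a WSGI application so that responses for certain file types carry the X-Robots-Tag: noindex header. The names noindex_middleware and demo_app, and the choice to match on the .pdf extension, are assumptions made for illustration only.

```python
def noindex_middleware(app, extensions=(".pdf",)):
    """Wrap a WSGI app so matching responses carry 'X-Robots-Tag: noindex'."""

    def wrapped(environ, start_response):
        path = environ.get("PATH_INFO", "").lower()

        def patched_start_response(status, headers, exc_info=None):
            # Only add the header for paths ending in one of the extensions.
            if path.endswith(tuple(extensions)):
                headers = list(headers) + [("X-Robots-Tag", "noindex")]
            return start_response(status, headers, exc_info)

        return app(environ, patched_start_response)

    return wrapped


def demo_app(environ, start_response):
    """Hypothetical application that returns a placeholder body for any path."""
    start_response("200 OK", [("Content-Type", "application/octet-stream")])
    return [b"placeholder"]
```

To try it locally, the wrapped application could be served with wsgiref.simple_server.make_server from the standard library. In production, the header is more commonly set in the web server's configuration files.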

The X-Robots-Tag can also be used to exclude specific search engine crawlers from indexing a webpage or other non-HTML resource. For example, the header below instructs Googlebot not to index the webpage.

X-Robots-Tag: googlebot: noindex
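To illustrate how a general and a crawler-specific X-Robots-Tag value differ, here is a simplified Python sketch that decides whether a set of header values blocks indexing for a given crawler. The function name header_blocks_indexing and the VALUE_DIRECTIVES set are assumptions for this example, and real crawlers may interpret edge cases differently.

```python
# Directives that carry their own "name: value" colon, so their prefix
# must not be mistaken for a crawler name.
VALUE_DIRECTIVES = {
    "unavailable_after",
    "max-snippet",
    "max-image-preview",
    "max-video-preview",
}


def header_blocks_indexing(header_values, crawler="googlebot"):
    """Return True if any X-Robots-Tag value tells `crawler` not to index.

    `header_values` is a list of raw header values, for example
    ["noindex"] or ["googlebot: noindex, nofollow"].
    """
    crawler = crawler.lower()
    for raw in header_values:
        prefix, sep, rest = raw.partition(":")
        prefix = prefix.strip().lower()
        if sep and "," not in prefix and prefix not in VALUE_DIRECTIVES:
            # The value is scoped to one crawler, e.g. "googlebot: noindex".
            if prefix != crawler:
                continue
            rules = rest
        else:
            # No crawler prefix: the rules apply to every crawler.
            rules = raw
        if "noindex" in {rule.strip().lower() for rule in rules.split(",")}:
            return True
    return False
```

With this sketch, a value of "googlebot: noindex" blocks indexing for googlebot only, while a bare "noindex" blocks it for every crawler name passed in.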

How to Deindex Your Site and Webpages in WordPress

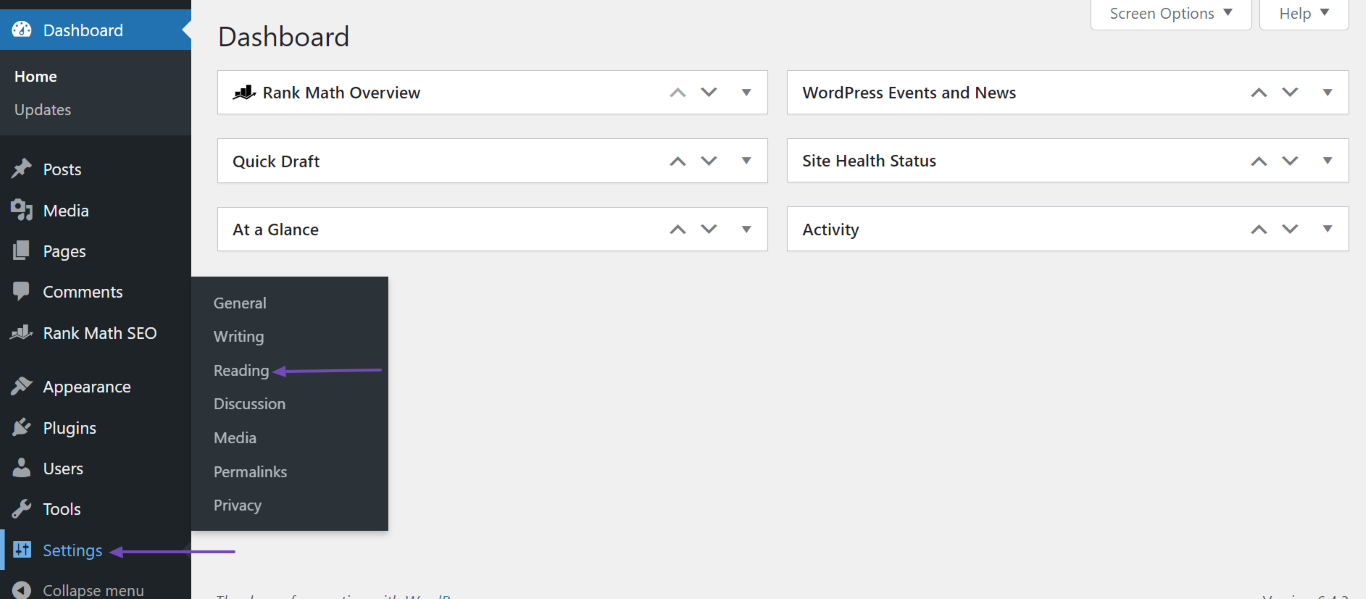

WordPress allows you to discourage search engines from indexing your site. To do that, head to WordPress Dashboard → Settings → Reading.

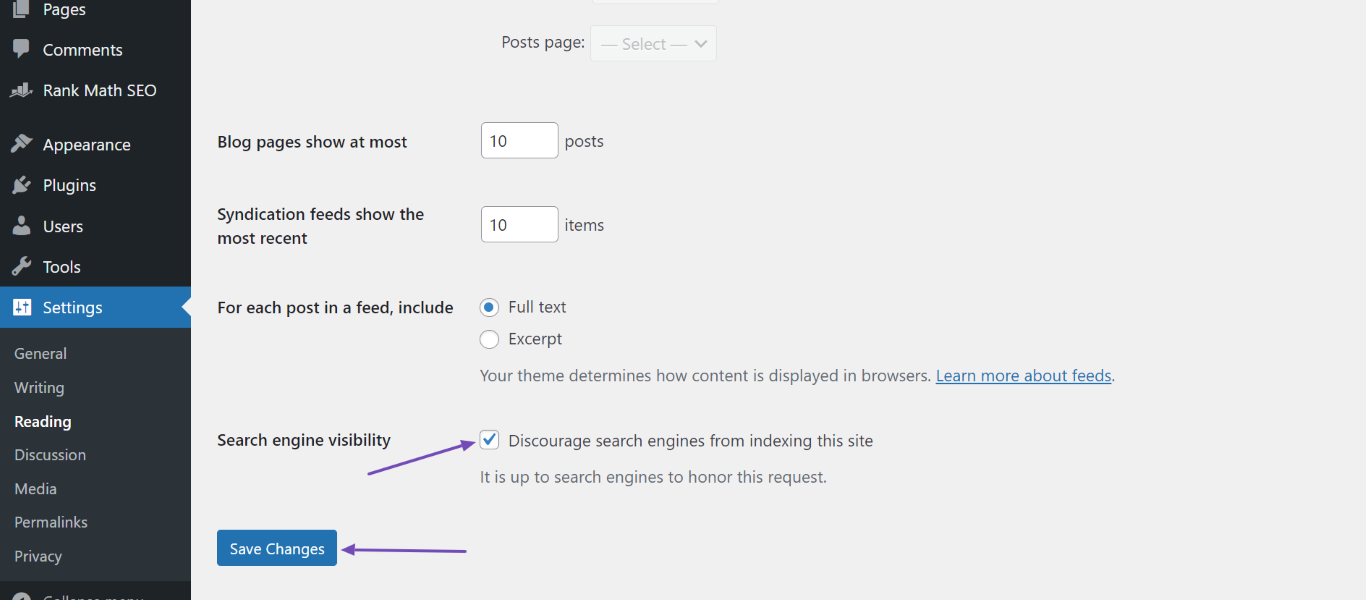

Once done, enable Discourage search engines from indexing this site and click Save Changes. Your entire site will now be set to noindex.

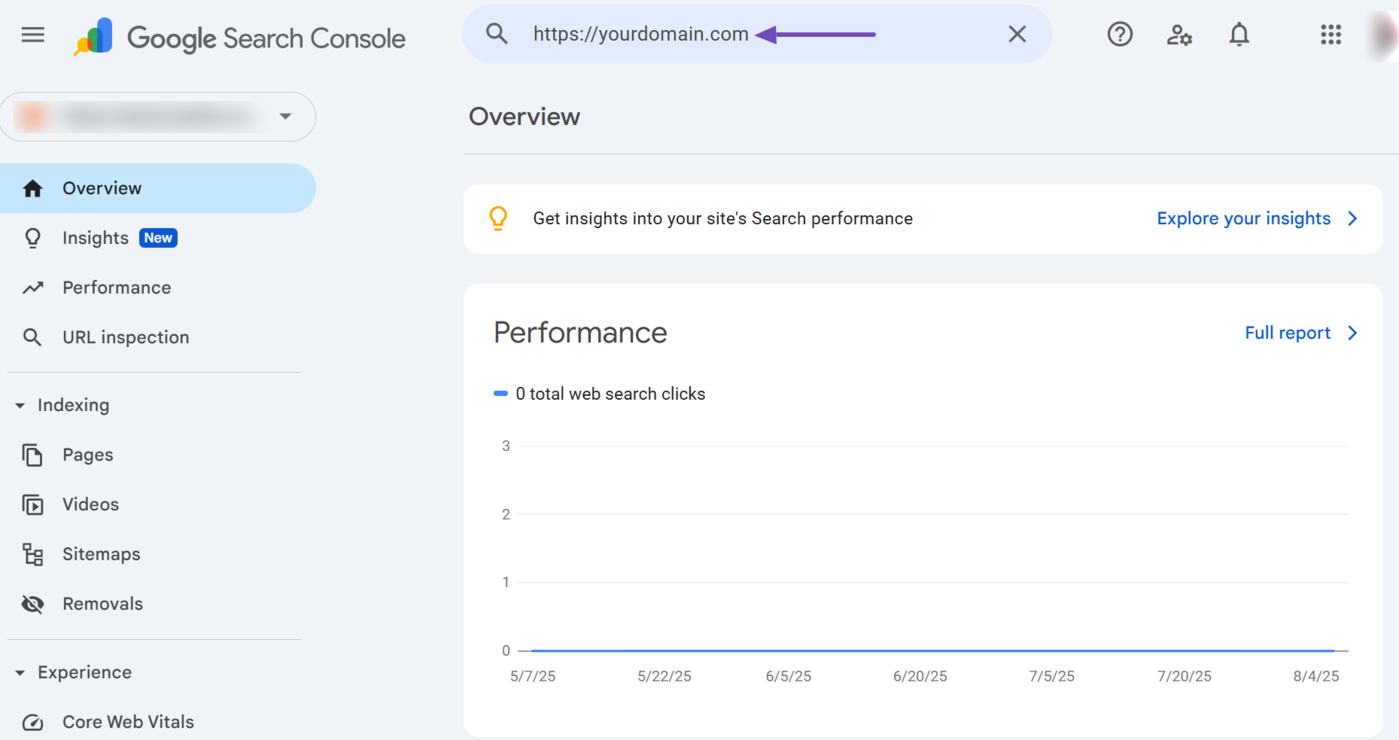

Now, ask Google to recrawl your site. To do that, head to the Google Search Console and enter your site’s URL into the URL Inspection field. Then, press Enter on your keyboard.

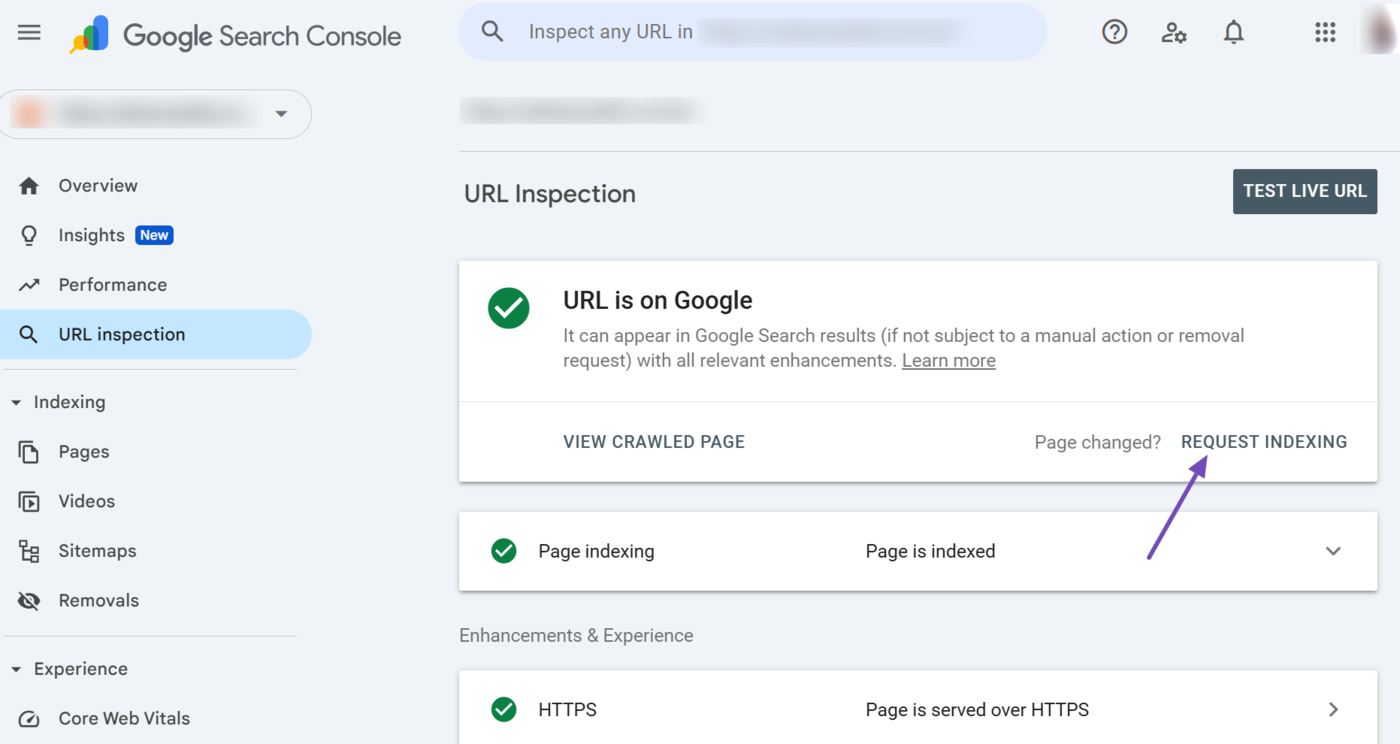

Once done, click Request Indexing, as shown below. Google will recrawl your site and deindex it when it detects the noindex tag.

That said, this method only works for deindexing your entire site. If you want to deindex your individual posts and pages, refer to this guide on setting your URLs to noindex.

Frequently Asked Questions

1. Can I Add the Noindex Meta Tag to the robots.txt File?

The robots.txt file does not support the noindex meta tag. So, you should only add the noindex meta tag to the head section of the page’s HTML code.

2. Should I Block the Webpage in the robots.txt File?

You should not block the webpage in the robots.txt file. Google must be able to access and crawl the webpage to see the noindex rule. If the page is blocked, Google cannot read the rule and may still index the URL and display it in search results if it discovers it through some other means.
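As a rough way to check this, the Python sketch below uses the standard library's urllib.robotparser to test whether a robots.txt file allows a crawler to fetch a URL, and therefore to read its noindex rule. The function name can_see_noindex and the example.com URLs are hypothetical, and Python's parser may not match Googlebot's own robots.txt matching in every case.

```python
from urllib.robotparser import RobotFileParser


def can_see_noindex(robots_txt, url, crawler="Googlebot"):
    """Return True if robots.txt lets `crawler` fetch `url`.

    A crawler that cannot fetch the page cannot read its noindex rule.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(crawler, url)


rules = "User-agent: *\nDisallow: /private/\n"
blocked = can_see_noindex(rules, "https://example.com/private/thank-you")
allowed = can_see_noindex(rules, "https://example.com/blog/post")
```

Here, blocked is False because the /private/ path is disallowed, so a noindex rule on that page would go unseen. Removing the Disallow line would let the crawler reach the page and honor the rule.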

3. What Happens if I Add the Noindex Rule to a Webpage Over a Long Period?

Google will stop crawling any webpage that has been declared noindex for an extended period of time. This applies even when you add a follow attribute to the webpage.

4. Can I Deindex Duplicate Pages?

Do not deindex duplicate pages. Instead, use the rel="canonical" link element to specify the original version of the webpage.